I like to think of a lot of constructions as playing pretend. Take the integers modulo $n$, for instance. Suppose we construct the naturals $0,1,2,\cdots$ and their additive inverses, then allow ourselves multiplication, but in the resulting theory we never specify when two numbers are not equal. Then we cannot derive any contradiction from, say, $0=n$ (keep in mind that a contradiction is a statement of the form $P\wedge\neg P$ for some proposition $P$). If we add $0=n$ as an axiom and allow ourselves to "pretend" that $0$ and $n$ are the same, then the arithmetic we end up describing is exactly that of the integers mod $n$. Bottom line: if you can pretend there is an algebraic structure with such-and-such properties and everything remains consistent, then such a structure does exist.
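A minimal sketch of the "pretend $0=n$" picture, assuming we model the integers mod $n$ by their canonical representatives $0,\dots,n-1$ (the helper names below are hypothetical). The check confirms that reducing before or after adding and multiplying gives the same answer, which is exactly the statement that the relation $n=0$ is compatible with the ring operations.

```python
def reduce_mod(k: int, n: int) -> int:
    """Canonical representative of k once we 'pretend' n = 0."""
    return k % n  # for n > 0, Python's % always lands in range(n), even for negative k

def is_compatible(n: int, bound: int = 50) -> bool:
    """Check that + and * on representatives agree with + and * on integers, then reduced."""
    for a in range(-bound, bound):
        for b in range(-bound, bound):
            ra, rb = reduce_mod(a, n), reduce_mod(b, n)
            if reduce_mod(a + b, n) != reduce_mod(ra + rb, n):
                return False
            if reduce_mod(a * b, n) != reduce_mod(ra * rb, n):
                return False
    return True

print(reduce_mod(7 * 5, 12), is_compatible(12))  # 11 True
```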

The philosophy of "playing pretend" is not exactly a mental image per se, but it is a state of mind that lets us operate various kinds of heavy algebraic machinery in an easy and intuitive way, and I think it is important to know about in the context of abstract algebra. Perhaps a set theorist, model theorist, or logician could formalize the concept of "pretend": say, the "theory" of an algebraic structure would be a collection of certain kinds of statements that can be made about it, and "pretending" relations are true would be tantamount to adjoining propositions to that theory as axioms. My familiarity is too weak to make pronouncements on this.

The generalization of this is to think of quotients as pretending relations are true. Formally, we create an equivalence relation preserved by the ambient algebraic operations, namely the one consisting of all "equations" that can be derived by performing algebra on a fixed set of relations. For instance, the abelianization $G^{\rm ab}:=G/[G,G]$ is obtained by adjoining the commutativity relations $ab=ba$ (for each pair $a,b\in G$) to the theory of the group $G$ (the same idea works for a ring $R$). In this vein, every group $G$ is the quotient of the free group whose alphabet is the underlying set of $G$ by the relations encoded in the multiplication table of $G$.
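To see the abelianization concretely, here is a small brute-force sketch for $G=S_3$, with permutations encoded as tuples of images (all helper names are hypothetical). It generates $[G,G]$ from the commutators $aba^{-1}b^{-1}$ and reports $|G^{\rm ab}|=|G|/|[G,G]|$: forcing $ab=ba$ collapses $S_3$ (order 6) onto a group of order 2, namely the sign.

```python
from itertools import permutations

def compose(p, q):
    """(p∘q)(i) = p(q(i)); permutations are tuples of images."""
    return tuple(p[q[i]] for i in range(len(q)))

def inverse(p):
    inv = [0] * len(p)
    for i, pi in enumerate(p):
        inv[pi] = i
    return tuple(inv)

def generated_subgroup(gens, identity):
    """Close a set of permutations under composition (enough in a finite group)."""
    group = {identity}
    frontier = [identity]
    while frontier:
        g = frontier.pop()
        for s in gens:
            h = compose(g, s)
            if h not in group:
                group.add(h)
                frontier.append(h)
    return group

G = list(permutations(range(3)))  # S_3
e = (0, 1, 2)
commutators = {compose(compose(a, b), compose(inverse(a), inverse(b))) for a in G for b in G}
derived = generated_subgroup(commutators, e)  # [G, G]
print(len(derived), len(G) // len(derived))   # 3 2
```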

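As a fun and simple example, in my abstract algebra class we once were trying to find an example of a ring $R$ for which the polynomial ring $R[x]$ contains a nonconstant idempotent (we had already seen that $\deg (f\cdot g)=\deg f+\deg g$ needs to be amended to $\le$ when the base ring contains zero divisors). The simplest case would be a linear polynomial with $(ax+b)^2=ax+b$. If $R$ is commutative, this rearranges to $a^2x^2+(2ab-a)x+(b^2-b)=0$, and it is tempting to make $a,b$ formal variables and set $R={\bf Z}[a,b]/(a^2,2ab-a,b^2-b)$. But this pretends too much: $a=(2b-1)(2ab-a)-4a(b^2-b)$ lies in the ideal, so $a=0$ in $R$ and $ax+b$ is constant (in fact, over any commutative ring every idempotent of $R[x]$ is constant). The fix is to stop pretending that $a$ and $b$ commute. Keeping $x$ central, the expansion becomes $a^2x^2+(ab+ba-a)x+(b^2-b)=0$, so we set $R={\bf Z}\langle a,b\rangle/(a^2,\,ab+ba-a,\,b^2-b)$, a quotient of the free associative ring on $a,b$. Here $a\neq0$, because $a\mapsto E_{12}$, $b\mapsto E_{11}$ (matrix units in $M_2({\bf Z})$) satisfies all three relations, and so $ax+b$ is a nonconstant idempotent in $R[x]$.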
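A quick sanity check of a noncommutative instance: in $M_2({\bf Z})[x]$ take $a=E_{12}$ and $b=E_{11}$, so that $ax+b=\begin{pmatrix}1&x\\0&0\end{pmatrix}$. The sketch below squares this matrix directly, using hypothetical helpers that store polynomials as integer coefficient lists $[c_0,c_1,\dots]$.

```python
def poly_add(p, q):
    """Add polynomials given as coefficient lists [c0, c1, ...]."""
    n = max(len(p), len(q))
    return [(p[i] if i < len(p) else 0) + (q[i] if i < len(q) else 0) for i in range(n)]

def poly_mul(p, q):
    out = [0] * (len(p) + len(q) - 1)
    for i, a in enumerate(p):
        for j, b in enumerate(q):
            out[i + j] += a * b
    return out

def trim(p):
    """Drop trailing zero coefficients so equal polynomials compare equal."""
    p = list(p)
    while len(p) > 1 and p[-1] == 0:
        p.pop()
    return p

def mat_mul(A, B):
    """Multiply 2x2 matrices whose entries are integer polynomials."""
    return [[trim(poly_add(poly_mul(A[i][0], B[0][j]), poly_mul(A[i][1], B[1][j])))
             for j in range(2)] for i in range(2)]

# a*x + b with a = E12, b = E11, i.e. the matrix [[1, x], [0, 0]]
P = [[[1], [0, 1]], [[0], [0]]]
print(mat_mul(P, P) == P)  # True
```

Noncommutativity is essential here: $ab=E_{12}E_{11}=0$ while $ba=E_{11}E_{12}=E_{12}$.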

It should go without saying that the more things we pretend are true about a structure, the more constrained that structure must end up being; sometimes we even create the trivial object in the process. Put another way: in an algebraic structure various things might be distinct, but once we pretend that two things we did not previously consider equal are equal, a domino effect occurs in which a whole bunch of other things suddenly become equal too.

The notion of freeness in this context is pretending only as much as is strictly necessary. If you have a set $X$ and want to form the free group on it, you at the very least have to assume that you can multiply and invert elements of $X$ and that the resulting group has an identity element, but beyond this nothing else needs to be assumed. Thus, if you assume any further relations, you end up with a quotient of the free group.
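Concretely, elements of the free group on $X$ can be modeled as reduced words, and the only "pretending" is that a letter next to its own inverse cancels. A minimal sketch, assuming letters are encoded as (generator, ±1) pairs (a hypothetical encoding): since no other relations are imposed, $ab$ and $ba$ reduce to different words, so they are different elements.

```python
from typing import List, Tuple

Letter = Tuple[str, int]  # (generator name, exponent +1 or -1)

def free_reduce(word: List[Letter]) -> List[Letter]:
    """Cancel adjacent x x^{-1} and x^{-1} x pairs, the only relations a free group imposes."""
    stack: List[Letter] = []
    for gen, exp in word:
        if stack and stack[-1] == (gen, -exp):
            stack.pop()  # a letter followed by its inverse cancels
        else:
            stack.append((gen, exp))
    return stack

def multiply(u: List[Letter], v: List[Letter]) -> List[Letter]:
    return free_reduce(u + v)

def invert(w: List[Letter]) -> List[Letter]:
    return [(g, -e) for g, e in reversed(w)]

ab = [("a", 1), ("b", 1)]
ba = [("b", 1), ("a", 1)]
print(multiply(ab, invert(ab)))               # []
print(multiply(ab, ba) == multiply(ba, ab))   # False
```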

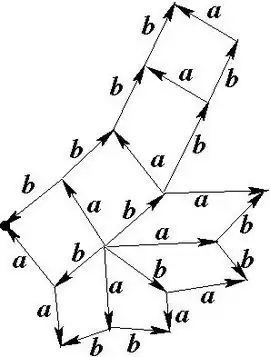

Similarly, to create the free product $A*B$ you at the very least need to keep the multiplication tables of $A$ and $B$ and need to be able to multiply elements of $A$ against those of $B$, but beyond this nothing else need be assumed. Pretending $A$ and $B$ commute with each other yields the direct sum (direct product) as a quotient of the free product: $A\oplus B\cong (A*B)/[A,B]$, where we view $A$ and $B$ as subgroups of the free product (the subgroup $[A,B]$ is automatically normal in $A*B$). Likewise, given an action $\varphi\colon H\to{\rm Aut}(N)$, the semidirect product $N\rtimes_\varphi H$ can be formed by quotienting the free product $N*H$ by the relations $hnh^{-1}=\varphi(h)(n)$ for all $n\in N$, $h\in H$, which is to say that we "pretend" conjugation by $H$ is the same as applying the prescribed automorphisms.
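For a concrete instance, the dihedral group of order $2n$ is ${\bf Z}/n\rtimes{\bf Z}/2$ with ${\bf Z}/2$ acting by negation. A minimal sketch, assuming elements are stored as pairs $(k,h)$ and multiplied by the usual rule $(k_1,h_1)(k_2,h_2)=(k_1+\varphi_{h_1}(k_2),\,h_1+h_2)$ (the function names are hypothetical): it checks that conjugation by the generator of $H$ really does act as the chosen automorphism, and that the result is nonabelian.

```python
def make_dihedral(n: int):
    """Z/n ⋊ Z/2, where the nontrivial element of Z/2 acts on Z/n by k -> -k."""
    def phi(h: int, k: int) -> int:
        # the automorphism of Z/n assigned to h in Z/2
        return k % n if h == 0 else (-k) % n

    def mul(x, y):
        # (k1, h1)(k2, h2) = (k1 + phi(h1)(k2), h1 + h2)
        (k1, h1), (k2, h2) = x, y
        return ((k1 + phi(h1, k2)) % n, (h1 + h2) % 2)

    return phi, mul

n = 5
phi, mul = make_dihedral(n)
elements = [(k, h) for k in range(n) for h in range(2)]
h = (0, 1)  # generator of H = Z/2; it is its own inverse

# the defining relation h n h^{-1} = phi(h)(n) for every element n = (k, 0) of N = Z/n
relation_holds = all(mul(mul(h, (k, 0)), h) == (phi(1, k), 0) for k in range(n))
nonabelian = any(mul(x, y) != mul(y, x) for x in elements for y in elements)
print(relation_holds, nonabelian)  # True True
```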

On this interpretation, if $A$ and $B$ are quotients of free groups, $A=FX/R$ and $B=FY/S$ (where $X,Y$ are disjoint and $R,S$ are the relations, or, more group-theoretically, the normal subgroups generated by the words we want to be equal to the identity), then the free product is easily understood to be $(FX/R)*(FY/S)\cong F(X\sqcup Y)/\langle\!\langle R\cup S\rangle\!\rangle$, where $\langle\!\langle\cdot\rangle\!\rangle$ denotes the normal closure in $F(X\sqcup Y)$.
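As a worked instance of this recipe, assuming the standard one-relator presentations of the cyclic factors (here $\langle\!\langle\cdot\rangle\!\rangle$ is normal closure), the presentations simply concatenate:

$$
{\bf Z}/2*{\bf Z}/3\;\cong\;\langle x\mid x^2\rangle*\langle y\mid y^3\rangle\;\cong\;\langle x,y\mid x^2,\,y^3\rangle\;=\;F(\{x,y\})/\langle\!\langle x^2,\,y^3\rangle\!\rangle,
$$

a group which is known to be isomorphic to ${\rm PSL}_2({\bf Z})$.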

Lie algebras $\frak g$ are often thought of as subalgebras of an endomorphism algebra with the standard commutator as the Lie bracket. This allows both algebra-type multiplication and the Lie bracket operation on the structure. However, just as abstract groups need not be thought of as symmetries or functions, we need not think of $\frak g$ as having any sort of multiplication operation (and therefore no commutator), only the abstract Lie bracket $[\cdot,\cdot]$ satisfying the given axioms. To create the universal enveloping algebra $U({\frak g})$, we first pretend we can multiply elements of $\frak g$ together, subject only to associativity and bilinearity (e.g. the distributive property $a(b+c)=ab+ac$), which yields the full tensor algebra $T({\frak g})\cong\bigoplus_{n\ge0}{\frak g}^{\otimes n}$; on top of this we pretend that the abstract Lie bracket is in fact the commutator, i.e. we quotient by the two-sided ideal generated by $ab-ba-[a,b]$ for all $a,b\in{\frak g}$.
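For example, ${\frak{sl}}_2$ has a basis $e,f,h$ with $[h,e]=2e$, $[h,f]=-2f$, $[e,f]=h$, so the relations defining $U({\frak{sl}}_2)$ read $he-eh=2e$, $hf-fh=-2f$, $ef-fe=h$. A small sketch, assuming the standard $2\times2$ matrices for $e,f,h$ (helper names are hypothetical): it checks that the matrix commutator satisfies these relations, which by the universal property of $U({\frak g})$ is what makes this assignment extend to an algebra map $U({\frak{sl}}_2)\to M_2$.

```python
def mat_mul(A, B):
    return [[sum(A[i][k] * B[k][j] for k in range(2)) for j in range(2)] for i in range(2)]

def commutator(A, B):
    """AB - BA: the product-based bracket we 'pretend' equals the abstract Lie bracket."""
    AB, BA = mat_mul(A, B), mat_mul(B, A)
    return [[AB[i][j] - BA[i][j] for j in range(2)] for i in range(2)]

def scale(c, A):
    return [[c * A[i][j] for j in range(2)] for i in range(2)]

e = [[0, 1], [0, 0]]
f = [[0, 0], [1, 0]]
h = [[1, 0], [0, -1]]

# the defining brackets of sl_2: [h,e] = 2e, [h,f] = -2f, [e,f] = h
checks = [
    commutator(h, e) == scale(2, e),
    commutator(h, f) == scale(-2, f),
    commutator(e, f) == h,
]
print(all(checks))  # True
```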

If $H\le G$ is a subgroup and $V$ is a representation of $H$ (basically a $K[H]$-module), then to create a $G$-rep out of it we allow ourselves to pretend there are actions of all of $G$ on $V$, subject to the subgroup $H$ acting in the already understood way. The induced representation is thus formed by "extending the scalars" of $V$ from $K[H]$ to $K[G]$: this is the isomorphism ${\rm Ind}_H^GV\cong K[G]\otimes_{K[H]}V$. More generally, tensoring with a ring extension lets us form a module (or algebra) by pretending we have more scalars to multiply by than we had before. For more useful applications of the tensor product, see the answers given to the questions here and here.
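When $V$ is the trivial representation $K$ of $H$, extending scalars gives $K[G]\otimes_{K[H]}K\cong K[G/H]$, the permutation representation on the left cosets, so its character at $g$ counts the cosets fixed by $g$. A brute-force sketch for $G=S_3$ and $H$ generated by a transposition (our choice of example; helper names are hypothetical):

```python
from itertools import permutations

def compose(p, q):
    """(p∘q)(i) = p(q(i)) for permutations stored as tuples of images."""
    return tuple(p[q[i]] for i in range(len(q)))

G = list(permutations(range(3)))  # S_3
H = [(0, 1, 2), (1, 0, 2)]        # subgroup generated by the transposition (0 1)

# left cosets gH, stored as frozensets so that equal cosets compare equal
cosets = {frozenset(compose(g, x) for x in H) for g in G}

def induced_trivial_character(g):
    """Trace of g on Ind_H^G(trivial): the number of cosets C with gC = C."""
    return sum(1 for c in cosets if frozenset(compose(g, x) for x in c) == c)

print(len(cosets))  # 3, i.e. [G : H] = dimension of the induced representation
for g in [(0, 1, 2), (1, 0, 2), (1, 2, 0)]:
    print(g, induced_trivial_character(g))
```

The values come out as $3,1,0$ on the identity, a transposition, and a $3$-cycle, matching the permutation character of $S_3$ acting on three points.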

The linked answers also explain how the difference between the tensor product and the direct sum can be understood quantum-mechanically. There we view vector spaces as spaces of formal linear combinations of basis vectors, i.e. superpositions of pure states of a physical system, and the essence of superposition in QM (informally; this is my opinion) is pretending a system can be in a combination of classical states, so I'd classify this under the same banner.

Other items I want to mention on this topic are fraction fields and virtual representations (both examples of a Grothendieck-type construction that in some sense allows us to 'complete' an 'incomplete' algebraic structure). Whereas quotients contract structures into smaller ones, and extension of scalars pulls in scalars from somewhere else we already have on hand, the fraction field creates fractions out of the existing structure. That is, given an integral domain $R$, we form pretend fractions $a/b$ for $a,b\in R$ with $b\neq0$ and then subject them to the obvious rules, e.g. $a/b=c/d$ if and only if $ad=bc$, and $a/b+c/d=(ad+bc)/(bd)$.
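A minimal sketch of the construction for $R={\bf Z}$, assuming pairs $(a,b)$ with $b\neq0$ stand for the pretend fractions (the function names are hypothetical); Python's standard `fractions.Fraction` is used only as an independent check.

```python
from fractions import Fraction
from typing import Tuple

Pair = Tuple[int, int]  # a "pretend fraction" a/b with b != 0

def equal(x: Pair, y: Pair) -> bool:
    """a/b = c/d is pretended to hold exactly when ad = bc."""
    (a, b), (c, d) = x, y
    return a * d == b * c

def add(x: Pair, y: Pair) -> Pair:
    (a, b), (c, d) = x, y
    return (a * d + b * c, b * d)

def mul(x: Pair, y: Pair) -> Pair:
    (a, b), (c, d) = x, y
    return (a * c, b * d)

x, y = (1, 2), (2, 6)  # 1/2 and 2/6 (which equals 1/3)
s = add(x, y)          # (1*6 + 2*2, 2*6) = (10, 12)
print(s, equal(s, (5, 6)))                                # (10, 12) True
print(Fraction(*s) == Fraction(1, 2) + Fraction(1, 3))    # True
```

Nothing is ever divided here: the pairs are never reduced, and the only "pretending" is the equality rule $ad=bc$.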

Representations of a given group $G$ over a field $K$ can be combined through either direct sums or tensor products, and these satisfy distributivity $(A\oplus B)\otimes C\cong(A\otimes C)\oplus(B\otimes C)$; in this way the isomorphism classes of representations form a semiring (with the trivial representation as the multiplicative identity) in which we can add and multiply, but we have none of the subtraction needed for a bona fide ring. So we simply pretend we can subtract, and we get the representation (aka Green) ring ${\rm Rep}(G)$ (which we may then tensor with a larger ring, e.g. ${\bf Q}$ or ${\bf C}$, to enlarge the scalars); the elements of the resulting ring are called virtual representations.
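Since a complex representation of a finite group is determined up to isomorphism by its character, the semiring and its ring of virtual representations can be explored through characters. A small sketch for $G=S_3$ over ${\bf C}$, assuming its standard character table (helper names are hypothetical): direct sum adds characters, tensor product multiplies them, and a formal difference is simply a class function with possibly negative multiplicities.

```python
# Conjugacy classes of S_3: identity, transpositions, 3-cycles (sizes 1, 3, 2).
CLASS_SIZES = [1, 3, 2]
ORDER = 6
IRREPS = {
    "trivial": [1, 1, 1],
    "sign": [1, -1, 1],
    "standard": [2, 0, -1],
}

def add(chi, psi):     # direct sum
    return [a + b for a, b in zip(chi, psi)]

def sub(chi, psi):     # the "pretend" subtraction of the representation ring
    return [a - b for a, b in zip(chi, psi)]

def tensor(chi, psi):  # tensor product multiplies characters pointwise
    return [a * b for a, b in zip(chi, psi)]

def decompose(chi):
    """Multiplicity of each irreducible via <chi, psi> = (1/|G|) sum_C |C| chi(C) psi(C)."""
    # all characters of S_3 are real-valued, so no complex conjugation is needed
    mults = {}
    for name, psi in IRREPS.items():
        total = sum(s * a * b for s, a, b in zip(CLASS_SIZES, chi, psi))
        assert total % ORDER == 0, "not a virtual character"
        mults[name] = total // ORDER
    return mults

std = IRREPS["standard"]
print(decompose(tensor(std, std)))  # {'trivial': 1, 'sign': 1, 'standard': 1}
virtual = sub(std, add(IRREPS["trivial"], IRREPS["sign"]))
print(virtual, decompose(virtual))  # [0, 0, -3] {'trivial': -1, 'sign': -1, 'standard': 1}
```

The virtual character $(0,0,-3)$ is not the character of any genuine representation, since its value at the identity (which would be the dimension) is $0$.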

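In the same way, finite $G$-sets (sets equipped with $G$-actions) may be multiplied via Cartesian products and added via disjoint unions, and when we pretend we can subtract we obtain the so-called Burnside ring. Linearizing a $G$-set $X$ into the permutation representation $K[X]$ gives a ring homomorphism from the Burnside ring to the representation ring, though it need not be injective: for $G=C_2\times C_2$ the Burnside ring has rank 5 (one basis element per conjugacy class of subgroups) while the complex representation ring has rank 4.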
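To compute in the Burnside ring one can use Burnside's theorem that a finite $G$-set $X$ is determined up to isomorphism by its marks $|X^K|$, the number of points fixed by each subgroup $K\le G$ (up to conjugacy), together with the fact that marks turn $\sqcup$ and $\times$ into $+$ and $\cdot$. A brute-force sketch for $G={\bf Z}/2$ (our choice of example; helper names are hypothetical):

```python
from itertools import product

# G = Z/2 = {0, 1}; a finite G-set is a pair (points, act), with act(g, x) the action.
G = [0, 1]
SUBGROUPS = {"1": [0], "G": G}

point = ([0], lambda g, x: x)              # G/G: a single fixed point
free = ([0, 1], lambda g, x: (x + g) % 2)  # G/1: G acting on itself

def disjoint_union(X, Y):
    (px, ax), (py, ay) = X, Y
    pts = [(0, x) for x in px] + [(1, y) for y in py]

    def act(g, p):
        tag, z = p
        return (tag, ax(g, z) if tag == 0 else ay(g, z))

    return pts, act

def cartesian_product(X, Y):
    (px, ax), (py, ay) = X, Y
    return list(product(px, py)), lambda g, p: (ax(g, p[0]), ay(g, p[1]))

def marks(X):
    """|X^K| for each subgroup K; these numbers determine X up to isomorphism."""
    pts, act = X
    return {name: sum(all(act(k, x) == x for k in K) for x in pts)
            for name, K in SUBGROUPS.items()}

print(marks(cartesian_product(free, free)))  # {'1': 4, 'G': 0}
print(marks(disjoint_union(free, free)))     # {'1': 4, 'G': 0}
```

Both prints agree, so $G\times G\cong G\sqcup G$, i.e. $[G/1]^2=2[G/1]$ in the Burnside ring. A virtual $G$-set such as $[G/1]-[G/G]$ has marks $(1,-1)$, which no genuine $G$-set can have.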

Probably more things can be thought of as pretend-new-things-are-true-about-an-existing-object, like say direct limits or fibred coproducts etc. but this is what comes to mind.