In Thomas's Calculus (11th edition), it is mentioned (Section 3.8 pg 225) that the derivative $dy/dx$ is not a ratio. Couldn't it be interpreted as a ratio, because according to the formula $dy = f'(x) \, dx$ we are able to plug in values for $dx$ and calculate a $dy$ (differential)? Then, if we rearrange we get $dy/dx=f'(x)$, and so $dy/dx$ can be seen as a ratio of $dy$ and $dx$. I wonder if the author says this because $dx$ is an independent variable, and $dy$ is a dependent variable, and for $dy/dx$ to be a ratio both variables need to be independent.

-

http://math.stackexchange.com/questions/1548487/is-this-a-correct-good-way-to-think-interpret-differentials-for-the-beginning-ca/1590019#1590019 – Dec 26 '15 at 22:58

-

It can be roughly interpreted as a rate of change of $y$ as a function of $x$. This statement has many shortcomings, though. – Aditya Guha Roy Aug 28 '17 at 17:39

-

dy compared to dx is how I look at it. – mick Jun 10 '18 at 21:50

-

It may be of interest to record Russell's views of the matter: "Leibniz's belief that the Calculus had philosophical importance is now known to be erroneous: there are no infinitesimals in it, and $dx$ and $dy$ are not numerator and denominator of a fraction." (Bertrand Russell, RECENT WORK ON THE PHILOSOPHY OF LEIBNIZ. Mind, 1903). – Mikhail Katz May 01 '14 at 17:10

-

Yes, an error indeed, and one that he elaborated on in embarrassing detail in his *Principles of Mathematics*. @TobyBartels – Mikhail Katz Apr 30 '17 at 12:20

-

So yet another error made by Russell, is what you're saying? – Toby Bartels Apr 29 '17 at 04:24

-

See also the related question at MathOverflow, https://mathoverflow.net/questions/73492/how-misleading-is-it-to-regard-fracdydx-as-a-fraction – Gerry Myerson Jun 26 '20 at 00:01

-

It is always a ratio, since infinitesimals never reach zero value. The result may not be affected by variable(s) (in)dependency - 'first thought'. – usiro Jul 29 '20 at 19:20

-

I think it is not meant to be interpreted as a quotient, as Arturo pointed out in his answer. Nonetheless, I would like to add my point. When we solve a DE like $dy/dx = x$, we normally write it as $dy = x\ dx$ and integrate, which makes it seem like a quotient, though it's not! – ultralegend5385 Oct 29 '20 at 04:26

-

https://math.stackexchange.com/questions/4067053/a-way-to-write-derivatives/4067142#4067142 – Aderinsola Joshua Mar 27 '21 at 09:52

26 Answers

Historically, when Leibniz conceived of the notation, $\frac{dy}{dx}$ was supposed to be a quotient: it was the quotient of the "infinitesimal change in $y$ produced by the change in $x$" divided by the "infinitesimal change in $x$".

However, the formulation of calculus with infinitesimals in the usual setting of the real numbers leads to a lot of problems. For one thing, infinitesimals can't exist in the usual setting of real numbers! Because the real numbers satisfy an important property, called the Archimedean Property: given any positive real number $\epsilon\gt 0$, no matter how small, and given any positive real number $M\gt 0$, no matter how big, there exists a natural number $n$ such that $n\epsilon\gt M$. But an "infinitesimal" $\xi$ is supposed to be so small that no matter how many times you add it to itself, it never gets to $1$, contradicting the Archimedean Property. Other problems: Leibniz defined the tangent to the graph of $y=f(x)$ at $x=a$ by saying "Take the point $(a,f(a))$; then add an infinitesimal amount to $a$, $a+dx$, and take the point $(a+dx,f(a+dx))$, and draw the line through those two points." But if they are two different points on the graph, then it's not a tangent, and if it's just one point, then you can't define the line because you just have one point. That's just two of the problems with infinitesimals. (See below where it says "However...", though.)

So Calculus was essentially rewritten from the ground up in the following 200 years to avoid these problems, and you are seeing the results of that rewriting (that's where limits came from, for instance). Because of that rewriting, the derivative is no longer a quotient, now it's a limit: $$\lim_{h\to0 }\frac{f(x+h)-f(x)}{h}.$$ And because we cannot express this limit-of-a-quotient as a-quotient-of-the-limits (both numerator and denominator go to zero), then the derivative is not a quotient.
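A minimal numerical sketch of this point, assuming the hypothetical example $f(x)=x^2$ at $x=3$ (the helper name `difference_quotient` is chosen here only for illustration). Every entry in the list is a genuine ratio; the derivative is the number those ratios approach, not any one of them:

```python
def difference_quotient(f, x, h):
    """Return (f(x + h) - f(x)) / h, an honest ratio for any h != 0."""
    return (f(x + h) - f(x)) / h


def square(x):
    # Hypothetical example function f(x) = x^2, so f'(3) = 6.
    return x * x


# Shrink h through 0.1, 0.01, ..., 0.00001 and record each ratio.
quotients = [difference_quotient(square, 3.0, 10.0 ** -k) for k in range(1, 6)]

# Distance of each ratio from the limit value 6.
errors = [abs(q - 6.0) for q in quotients]

for q, e in zip(quotients, errors):
    print(q, e)
```

The errors shrink roughly in proportion to $h$, but $h=0$ itself cannot be plugged in, which is why the limit is needed.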

However, Leibniz's notation is very suggestive and very useful; even though derivatives are not really quotients, in many ways they behave as if they were quotients. So we have the Chain Rule: $$\frac{dy}{dx} = \frac{dy}{du}\;\frac{du}{dx}$$ which looks very natural if you think of the derivatives as "fractions". You have the Inverse Function theorem, which tells you that $$\frac{dx}{dy} = \frac{1}{\quad\frac{dy}{dx}\quad},$$ which is again almost "obvious" if you think of the derivatives as fractions. So, because the notation is so nice and so suggestive, we keep the notation even though the notation no longer represents an actual quotient, it now represents a single limit. In fact, Leibniz's notation is so good, so superior to the prime notation and to Newton's notation, that England fell behind all of Europe for centuries in mathematics and science because, due to the fight between Newton's and Leibniz's camp over who had invented Calculus and who stole it from whom (consensus is that they each discovered it independently), England's scientific establishment decided to ignore what was being done in Europe with Leibniz notation and stuck to Newton's... and got stuck in the mud in large part because of it.

(Differentials are part of this same issue: originally, $dy$ and $dx$ really did mean the same thing as those symbols do in $\frac{dy}{dx}$, but that leads to all sorts of logical problems, so they no longer mean the same thing, even though they behave as if they did.)

So, even though we write $\frac{dy}{dx}$ as if it were a fraction, and many computations look like we are working with it like a fraction, it isn't really a fraction (it just plays one on television).

However... There is a way of getting around the logical difficulties with infinitesimals; this is called nonstandard analysis. It's pretty difficult to explain how one sets it up, but you can think of it as creating two classes of real numbers: the ones you are familiar with, that satisfy things like the Archimedean Property, the Supremum Property, and so on, and then you add another, separate class of real numbers that includes infinitesimals and a bunch of other things. If you do that, then you can, if you are careful, define derivatives exactly like Leibniz, in terms of infinitesimals and actual quotients; if you do that, then all the rules of Calculus that make use of $\frac{dy}{dx}$ as if it were a fraction are justified because, in that setting, it is a fraction. Still, one has to be careful because you have to keep infinitesimals and regular real numbers separate and not let them get confused, or you can run into some serious problems.

-

As a physicist, I prefer Leibniz notation simply because it is dimensionally correct regardless of whether it is derived from the limit or from nonstandard analysis. With Newtonian notation, you cannot automatically tell what the units of $y'$ are. – rcollyer Mar 10 '11 at 16:34

-

Have you any evidence for your claim that "England fell behind Europe for centuries"? – Kevin H. Lin Mar 21 '11 at 22:05

-

@Kevin: Look at the history of math. Shortly after Newton and his students (Maclaurin, Taylor), all the developments in mathematics came from the Continent. It was the Bernoullis, Euler, who developed Calculus, not the British. It wasn't until Hamilton that they started coming back, and when they reformed math teaching in Oxford and Cambridge, they adopted the continental ideas and notation. – Arturo Magidin Mar 22 '11 at 01:42

-

Mathematics really did not have a firm hold in England. It was the Physics of Newton that was admired. Unlike in parts of the Continent, mathematics was not thought of as a serious calling. So the "best" people did other things. – André Nicolas Jun 20 '11 at 19:02

-

There's a free calculus textbook for beginning calculus students based on the nonstandard analysis approach [here](http://www.math.wisc.edu/~keisler/). Also there is a monograph on infinitesimal calculus aimed at mathematicians and at instructors who might be using the aforementioned book. – tzs Jul 06 '11 at 16:24

-

This is a great post, very informative and well written, but it doesn't really answer the question. That is to say, it doesn't show how $dy$ and $dx$ can be interpreted as fixed quantities when working with differentials. – Brendan Cordy Nov 05 '11 at 16:42

-

@Brendan: Right; I'm addressing the question in the title and the main thrust: does $\frac{dy}{dx}$ represent a quotient or not? It does not (in the usual setting). For a discussion of differentials, see [here](http://math.stackexchange.com/questions/23902/what-is-the-practical-difference-between-a-differential-and-a-derivative/23914#23914). – Arturo Magidin Nov 05 '11 at 20:38

-

There's also another approach to infinitesimals which is also geometric and is close to the intuition that Newton & Leibniz used: smooth synthetic geometry. Basically, it makes rigorous the idea of an infinitely small tangent/number $dx$ which, when squared, goes to zero. – Mozibur Ullah Mar 11 '12 at 17:41

-

Thanks, this helped me sort through my thoughts immensely. As an engineer, I have to admit that I've been plodding along with a very Leibniz-like feel for calculus. It's not that the limit interpretation doesn't make sense, it's just hard to think about limits and squeeze theorem every time you're trying to get a sense of the physical quantities you're manipulating. – Asad Saeeduddin Oct 01 '13 at 00:51

-

I prefer $\frac{\Delta y}{\Delta x}$ instead of $\frac{dy}{dx}$. After all, the $dx$ expression came from abbreviating _"delta $x$"_. Using $\Delta$ instead makes it clearer that it means _"the change of $y$ with respect to $x$"_ instead of looking like what shouldn't be a ratio at first glance. – Cole Tobin May 16 '14 at 16:40

-

@ColeJohnson: The derivative is the *limit* of $\frac{\Delta y}{\Delta x}$ as $\Delta x$ goes to zero. (The difference between $\Delta x$ and $dx$ is that the former is finite while the latter, insofar as it's meaningful, is infinitesimal.) – ruakh Nov 20 '14 at 06:04

-

Up until you introduce differential operators @colejohnson. Then what is $\Delta y$, a change or a laplacian? :) – MickG Apr 20 '15 at 16:10

-

Being totally pedantic, England stopped producing useful new math and science for less than 150 years - far too long to justify and a horrible dark age, but centuries plural is a stretch. – user121330 May 14 '15 at 17:11

-

Old post, but.... I think the fact that the limit of a ratio is not a ratio (the derivative) is sooo much like the fact the limit of a rational sequence need not be rational. In fact, the analogy is perfect, but instead of rational numbers it's the limit of rational functions. – Squirtle Jul 23 '15 at 01:37

-

Thank you for the amazing answer. I am very bad at maths and wanted to clarify one thing and I hope someone can help. You say `And because we cannot express this limit-of-a-quotient as a-quotient-of-the-limits (both numerator and denominator go to zero), then the derivative is not a quotient.` Why do we need to express the limit as a quotient of the limits to establish whether it is a quotient or not? – Luca Aug 16 '16 at 11:05

-

To correct one of the comments above, (William Rowan) Hamilton was Irish, not English. – Boon Apr 10 '18 at 22:35

-

When you're talking about non-standard analysis, are you talking about the use of the dual numbers? – user2662833 Oct 09 '18 at 04:57

-

@user2662833: I do not know what "use of dual numbers" is. I'm talking about the, ahem, standard meaning of "non-standard analysis", which is analysis with infinitesimals. See [Wikipedia](https://en.wikipedia.org/wiki/Non-standard_analysis). – Arturo Magidin Oct 09 '18 at 05:34

-

So I'm talking about [these beasts](https://en.wikipedia.org/wiki/Dual_number), definitely worth a read :D But it's funny how similar these ideas are. – user2662833 Oct 09 '18 at 09:21

-

@user2662833: No, they aren't. Those dual numbers are to take into account nilpotent elements, to formalize some ideas from algebraic geometry, and for other algebraic notions. They do not play the role of infinitesimals in non-standard analysis, or anything anywhere near that. – Arturo Magidin Oct 09 '18 at 20:13

-

I meant that they were similar in the sense that both are trying to extend the real numbers to include an infinitesimal quantity that follows a different set of rules (although I do agree that the rules are different). I don't know enough about non-standard analysis to say that the dual numbers should appear there :P That wasn't my point – user2662833 Oct 09 '18 at 22:24

-

As a side note (which almost nobody will read, down here) in the last century or so, the situation has been reversed. High schools in several European countries (case in point, Italy) have been teaching calculus using exclusively prime / Newton notation, **not even introducing** Leibniz notation. This is causing *generations* of us to struggle way more than we should, what with Newton's notation being more cumbersome and less intuitive to work with, and with us not being able to read mathematical websites and scientific papers right out of high school, as they universally use Leibniz notation. – Tobia Apr 08 '19 at 11:13

-

In what kind of computations (if anyone could give an example) would one run into ambiguities if one uses them as fractions and not as a limit-of-the-quotients? – aneet kumar Dec 24 '20 at 12:54

-

Can you clarify that statement "But if they are two different points on the graph, then it's not a tangent, and if it's just one point, then you can't define the line because you just have one point. " – Nick Feb 28 '21 at 01:35

-

@Nick: Sorry, but what is there to clarify? The tangent can’t intersect the graph at two different points like that, and if you only have one point, you can’t define a line: you need either two points, or you need a point and a slope. A point by itself doesn’t describe a line. – Arturo Magidin Feb 28 '21 at 01:52

-

I think I'm confused with the definition of the tangent you're using. On Wiki the tangent is defined a line between infinitely close points. You say "But if they are two different points on the graph, then it's not a tangent". Can you clarify? I'm trying to understand the problem with Leibniz theory and the tangent. – Nick Feb 28 '21 at 02:27

-

@Nick: The tangent is the line that affords the best linear approximation near the point; see [here](https://math.stackexchange.com/questions/12287/approaching-to-zero-but-not-equal-to-zero-then-why-do-the-points-get-overlappe) and elsewhere. For reasonable enough functions, the line through two distinct nearby points (when the graph is not a straight line) is not the tangent, because the line that goes through the point $a$ and the point that is halfway between $a$ and that second point will be a better approximation. (cont) – Arturo Magidin Feb 28 '21 at 02:35

-

@Nick: I strongly advise you not to take informal definitions from “wiki”, and to stick to a single source. The kind of mix-and-match approach you are using is only going to lead to more confusion, because you are looking at different approaches with different definitions and different notions and trying to mesh them into a single coherent narrative; it won’t work. It would be like trying to create a novel by picking pages out of different books and stitching them together. – Arturo Magidin Feb 28 '21 at 02:37

-

A good example of why it cannot be treated as a ratio is given here: https://arxiv.org/pdf/1801.09553.pdf According to the authors, problems happen mostly when extending to the second derivative. They give as an example the case of $y$ depending on $x$, itself depending on $t$, and computing by the chain rule the second derivative of $y$ according to $t$, which gives $$\frac{d^2 y}{dt^2}=\frac{d^2 y}{dx^2}(\frac{dx}{dt})^2+\frac{dy}{dx} \frac{d^2 x}{dt^2}$$ and not $$\frac{d^2 y}{dt^2}=\frac{d^2y}{dx^2} (\frac{dx^2}{dt^2})=\frac{d^2 y}{dx^2} (\frac{dx}{dt})^2.$$ – The Quark Jul 06 '21 at 16:08

-

There is another way to construct this, using dual numbers. It is better to think that numbers are not necessarily closed under operations, so sometimes an operation does not produce a number. When that is needed, as with complex numbers, you need another dimension to represent this "non-number". Dual numbers are a better approach here because you can define infinitesimals abstractly, like imaginary numbers, and treat the subject analytically, as in complex analysis. In the end, math is not about what is, but about what you can do. – Guilherme Namen May 25 '22 at 11:28

Just to add some variety to the list of answers, I'm going to go against the grain here and say that you can, in an albeit silly way, interpret $dy/dx$ as a ratio of real numbers.

For every (differentiable) function $f$, we can define a function $df(x; dx)$ of two real variables $x$ and $dx$ via $$df(x; dx) = f'(x)\,dx.$$ Here, $dx$ is just a real number, and no more. (In particular, it is not a differential 1-form, nor an infinitesimal.) So, when $dx \neq 0$, we can write: $$\frac{df(x;dx)}{dx} = f'(x).$$
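A small sketch of this definition, assuming the hypothetical choice $f=\sin$ (so $f'=\cos$); the function name `df` mirrors the notation above, and the sample values of $dx$ are arbitrary:

```python
import math


def df(f_prime, x, dx):
    """The two-variable function df(x; dx) = f'(x) * dx, with dx an ordinary real number."""
    return f_prime(x) * dx


# Hypothetical example: f = sin, whose derivative is cos, evaluated at x = 1.
x = 1.0
sample_dx = (2.0, 0.5, -3.0)

# For every nonzero dx, the plain real-number ratio df(x; dx) / dx recovers f'(x).
ratios = [df(math.cos, x, dx) / dx for dx in sample_dx]

for dx, r in zip(sample_dx, ratios):
    print(dx, r, math.cos(x))
```

Note that $dx$ here need not be small at all: the ratio equals $f'(x)$ for any nonzero real $dx$.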

All of this, however, should come with a few remarks.

It is clear that these notations above do not constitute a definition of the derivative of $f$. Indeed, we needed to know what the derivative $f'$ meant before defining the function $df$. So in some sense, it's just a clever choice of notation.

But if it's just a trick of notation, why do I mention it at all? The reason is that in higher dimensions, the function $df(x;dx)$ actually becomes the focus of study, in part because it contains information about all the partial derivatives.

To be more concrete, for multivariable functions $f\colon R^n \to R$, we can define a function $df(x;dx)$ of two n-dimensional variables $x, dx \in R^n$ via $$df(x;dx) = df(x_1,\ldots,x_n; dx_1, \ldots, dx_n) = \frac{\partial f}{\partial x_1}dx_1 + \ldots + \frac{\partial f}{\partial x_n}dx_n.$$

Notice that this map $df$ is linear in the variable $dx$. That is, we can write: $$df(x;dx) = (\frac{\partial f}{\partial x_1}, \ldots, \frac{\partial f}{\partial x_n}) \begin{pmatrix} dx_1 \\ \vdots \\ dx_n \\ \end{pmatrix} = A(dx),$$ where $A$ is the $1\times n$ row matrix of partial derivatives.

In other words, the function $df(x;dx)$ can be thought of as a linear function of $dx$, whose matrix has variable coefficients (depending on $x$).
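The linearity in $dx$ can be checked directly. This is only a sketch, assuming the hypothetical function $f(x_1,x_2)=x_1^2x_2$ with its gradient written out by hand; the names `df` and `grad_f` are illustrative:

```python
def grad_f(x):
    """Partial derivatives of the hypothetical f(x1, x2) = x1**2 * x2."""
    x1, x2 = x
    return (2.0 * x1 * x2, x1 ** 2)


def df(grad, x, dx):
    """df(x; dx) = sum_i (partial f / partial x_i)(x) * dx_i: a row vector times a column vector."""
    return sum(g * d for g, d in zip(grad(x), dx))


x = (1.0, 2.0)   # base point; the row matrix A = grad_f(x) is (4, 1) here
u = (0.5, -1.0)  # two arbitrary increments
v = (2.0, 3.0)

# Linearity in the second argument: df(x; 2u + v) == 2 df(x; u) + df(x; v).
combined = tuple(2.0 * a + b for a, b in zip(u, v))
lhs = df(grad_f, x, combined)
rhs = 2.0 * df(grad_f, x, u) + df(grad_f, x, v)
print(lhs, rhs)
```

With $n>1$ there is no way to "divide by $dx$", since $dx$ is a vector; only the product $A(dx)$ makes sense.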

So for the $1$-dimensional case, what is really going on is a trick of dimension. That is, we have the variable $1\times1$ matrix ($f'(x)$) acting on the vector $dx \in R^1$ -- and it just so happens that vectors in $R^1$ can be identified with scalars, and so can be divided.

Finally, I should mention that, as long as we are thinking of $dx$ as a real number, mathematicians multiply and divide by $dx$ all the time -- it's just that they'll usually use another notation. The letter "$h$" is often used in this context, so we usually write $$f'(x) = \lim_{h \to 0} \frac{f(x+h) - f(x)}{h},$$ rather than, say, $$f'(x) = \lim_{dx \to 0} \frac{f(x+dx) - f(x)}{dx}.$$ My guess is that the main aversion to writing $dx$ is that it conflicts with our notation for differential $1$-forms.

EDIT: Just to be even more technical, and at the risk of being confusing to some, we really shouldn't even be regarding $dx$ as an element of $R^n$, but rather as an element of the tangent space $T_xR^n$. Again, it just so happens that we have a canonical identification between $T_xR^n$ and $R^n$ which makes all of the above okay, but I like the distinction between tangent space and euclidean space because it highlights the different roles played by $x \in R^n$ and $dx \in T_xR^n$.

-

Cotangent space. Also, in case of multiple variables if you fix $dx^2,\ldots,dx^n = 0$ you can still divide by $dx^1$ and get the derivative. And nothing stops you from defining differential first and then defining derivatives as its coefficients. – Alexei Averchenko Feb 11 '11 at 02:30

-

@Alexei Averchenko: Ah, I hadn't thought of setting $dx^2 = \ldots = dx^n = 0$, that's interesting. – Jesse Madnick Feb 11 '11 at 05:35

-

@Alexei: However, I do think I mean tangent space and not cotangent. I'm making reference to the pushforward map $f_*\colon T_xR^n \to T_{f(x)}R$. In other words, there's an additional conflict of notation between tangent vectors and differential 1-forms. – Jesse Madnick Feb 11 '11 at 05:37

-

Well, canonically differentials are members of the cotangent bundle, and $dx$ is in this case its basis. – Alexei Averchenko Feb 11 '11 at 05:54

-

Maybe I'm misunderstanding you, but I never made any reference to differentials in my post. My point is that $df(x;dx)$ can be likened to the pushforward map $f_*$. Of course one can also make an analogy with the actual differential 1-form $df$, but that's something separate. – Jesse Madnick Feb 11 '11 at 06:18

-

My point is that when people talk about a linearization of a map from $R^n \to R$, the input of this linear map is tangent vectors, even though the notation is often (unfortunately) $dx$. – Jesse Madnick Feb 11 '11 at 06:20

-

Sure the input is a vector, that's why these linearizations are called covectors, which are members of cotangent space. I can't see why you are bringing up pushforwards when there is a better description right there. – Alexei Averchenko Feb 11 '11 at 09:12

-

I see. Normally when I think of the linearization of a smooth map $f\colon M \to N$ between manifolds, I think of the pushforward $f_*$. I also think this nicely generalizes the notion of the so-called "total derivative" of maps $R^m \to R^n$. I suppose one can also use vector-valued differential forms for this purpose... perhaps it's just a matter of personal taste. – Jesse Madnick Feb 11 '11 at 16:11

-

I just don't think pushforward is the best way to view the differential of a function with codomain $\mathbb{R}$ (although it is perfectly correct); it's just too complex an idea for something that has a more natural treatment. – Alexei Averchenko Feb 12 '11 at 04:25

-

Out of curiosity, and I know this is an old post, but how is this different from taking the exterior derivative of $f$, in which case the $dx_i$ are actually differential one forms? – jgon May 08 '15 at 19:16

My favorite "counterexample" to the derivative acting like a ratio: the implicit differentiation formula for two variables. We have $$\frac{dy}{dx} = -\frac{\partial F/\partial x}{\partial F/\partial y} $$

The formula is almost what you would expect, except for that pesky minus sign.

See Implicit differentiation for the rigorous definition of this formula.
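A quick numerical check of the sign, as a sketch under the assumption of the hypothetical level curve $F(x,y)=x^2+y^2-1=0$ (the unit circle), solved explicitly on its upper half as $y=\sqrt{1-x^2}$:

```python
import math


def y_of_x(x):
    """Upper half of the unit circle, i.e. the level curve F(x, y) = x^2 + y^2 - 1 = 0."""
    return math.sqrt(1.0 - x * x)


x0 = 0.6
y0 = y_of_x(x0)  # approximately 0.8

# Slope of the curve itself, estimated by a central difference.
h = 1e-6
slope = (y_of_x(x0 + h) - y_of_x(x0 - h)) / (2.0 * h)

# Partial derivatives of F at (x0, y0).
F_x = 2.0 * x0
F_y = 2.0 * y0

formula = -F_x / F_y  # implicit differentiation formula, with the minus sign
naive = F_x / F_y     # what "cancelling the partial F's" would suggest

print(slope, formula, naive)
```

The measured slope agrees with the formula (about $-0.75$), while the naive fraction-style cancellation has the wrong sign.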

-

Yes, but there is a fake proof of this that comes from that kind of reasoning. If $f(x,y)$ is a function of two variables, then $df=\frac{\partial f}{\partial x}dx+\frac{\partial f}{\partial y}$. Now if we pick a level curve $f(x,y)=0$, then $df=0$, so solving for $\frac{dy}{dx}$ gives us the expression above. – Baby Dragon Jun 16 '13 at 19:03

-

@Lurco: He meant $df = \frac{\partial f}{\partial x} dx + \frac{\partial f}{\partial y} dy$, where those 'infinitesimals' are not proper numbers and hence it is wrong to simply substitute $df=0$, because in fact if we are consistent in our interpretation $df=0$ would imply $dx=dy=0$ and hence we can't get $\frac{dy}{dx}$ anyway. But if we are **inconsistent**, we can ignore that and proceed to get the desired expression. Correct answer but fake proof. – user21820 May 13 '14 at 09:58

-

I agree that this is a good example to show why such notation is not so simple as one might think, but in this case I could say that $dx$ is not the same as $\partial x$. Do you have an example where the terms really cancel to give the wrong answer? – user21820 May 13 '14 at 10:07

-

I don't know. Assume dependence on a third dummy variable with respect to which everything is constant so that the lhs is really a partial? – asmeurer May 13 '14 at 17:45

-

-1 I'm sorry, but this is based on a misapprehension. The dy/dx is for the geometric entities which are the level curves; the partial derivatives are for the function we are taking level curves from. This doesn't demonstrate what it is claimed to, as we are using a different entity on the left than on the right. – John Robertson May 18 '15 at 04:25

-

@JohnRobertson of course it is. That's the whole point here, that this sort of "fake proof" thinking leads to wrong results here. I blatantly ignore the fact that $d \neq \partial$ (essentially), and I also completely ignore what $F$ really is. My only point here is that if you try to use this as a mnemonic (or worse, a "proof" method), you will get completely wrong results. – asmeurer May 19 '15 at 18:58

-

But it gives the wrong idea, because dx and dy are perfectly well defined 1-forms (they act on tangent vectors, not points). And their ratio is constant on fibers (i.e. it depends only on the base point of the vector) and so it descends to a well defined function which is exactly f'(x). – John Robertson May 19 '15 at 19:04

-

Hello again! I have thought about this issue recently, so when I came across your answer again I see that it is merely a good example that blindly doing things will lead to nonsense (hopefully a well-known fact!) but a poor counter-example of being able to treat $\frac{dy}{dx}$ as notation for approximation of $\frac{\Delta y}{\Delta x}$. The reason is that this actually works well and can be made rigorous via an intuitive interface of asymptotic behaviour that does not actually invoke epsilon-delta definitions. Of course if one wants to prove that interface we still need the modern definitions. – user21820 Aug 07 '15 at 08:30

-

I just wanted to add that the correct interpretation of the implicit function theorem above is that, holding the function constant at zero, the derivative shows how much y changes when x changes an infinitely small amount. It can be read as "How much of X would replace Y". Thus the above proof relies on an assumption and is not a proof at all. The complete formula is actually: $$\frac{dy}{dx} = -\frac {\delta F /\delta x}{\delta F / \delta y} +\frac{dF }{dx }\frac{\delta y}{\delta F}$$ – Dole Jun 26 '16 at 07:47

-

Just as a note, the link below has a discussion showing how this is a result of faulty notation, not because it isn't a ratio. In short, the two $\partial F$s in $\frac{\partial F}{\partial x}$ and $\frac{\partial F}{\partial y}$ do not refer to the same thing. If you separate them, giving them a subscript or something, then the algebra works out perfectly. However, $\partial x$ and $\partial y$ are not equal to $dx$ and $dy$ in all circumstances, either (though they are here). https://journals.blythinstitute.org/ojs/index.php/cbi/article/view/47/46 – johnnyb Oct 15 '19 at 23:44

It is best to think of $\frac{d}{dx}$ as an operator which takes the derivative, with respect to $x$, of whatever expression follows.

-

What kind of justification do you want? It is a very good argument for telling that $\frac{dy}{dx}$ is not a fraction! This tells us that $\frac{dy}{dx}$ needs to be seen as $\frac{d}{dx}(y)$ where $\frac{d}{dx}$ is an operator. – Emo May 02 '14 at 20:43

-

Over the hyperreals, $\frac{dy}{dx}$ is a ratio *and* one can view $\frac{d}{dx}$ as an operator. Therefore Tobin's reply is *not* a good argument for "telling that dy/dx is not a fraction". – Mikhail Katz Dec 15 '14 at 16:11

-

This doesn't address the question. $\frac{y}{x}$ is clearly a ratio, but it can be also thought as the operator $\frac{1}{x}$ acting on $y$ by multiplication, so "operator" and "ratio" are not exclusive. – mlainz Jan 29 '19 at 10:52

-

Thanks for the comments - I think all of these criticisms of my answer are valid. – Tobin Fricke Jan 30 '19 at 19:38

-

Yes, $d$ is not multiplied by $y$, but when you take $d(y)$ you get $dy$, which in turn is proportional to $y^{\prime}$ and $dx$. If you could just "multiply" $d$ by $y$ to get $dy$, then you could do the same for $x$, and get $\frac{dy}{dx}=\frac{y}{x}$, which is why $d(y) \neq d \cdot y$. What I said before that is how you translate $\frac{d}{dx}(y)=\frac{dy}{dx}$ where they both agree. What @MikhailKatz said. – cmarangu Oct 13 '19 at 03:08

-

@TobinFricke my professor refers to such operators as coffee machines; without the coffee beans (y for instance), we would have no coffee (derivative of y with respect to x) – Omar Shaaban Jun 17 '20 at 11:16

-

I would like to think of $\frac{d}{dx}$ as an operator. But then what is it when I find a $dy$ on its own in the wild? ("In the wild" means "In some proof/paper/website/formula...") – lucidbrot Jul 12 '21 at 14:20

In Leibniz's mathematics, if $y=x^2$ then $\frac{dy}{dx}$ would be "equal" to $2x$, but the meaning of "equality" to Leibniz was not the same as it is to us. He emphasized repeatedly (for example in his 1695 response to Nieuwentijt) that he was working with a generalized notion of equality "up to" a negligible term. Also, Leibniz used several different pieces of notation for "equality". One of them was the symbol "$\,{}_{\ulcorner\!\urcorner}\,$". To emphasize the point, one could write $$y=x^2\quad \rightarrow \quad \frac{dy}{dx}\,{}_{\ulcorner\!\urcorner}\,2x$$ where $\frac{dy}{dx}$ is literally a ratio. When one expresses Leibniz's insight in this fashion, one is less tempted to commit an ahistorical error of accusing him of having committed a logical inaccuracy.

In more detail, $\frac{dy}{dx}$ is a true ratio in the following sense. We choose an infinitesimal $\Delta x$, and consider the corresponding $y$-increment $\Delta y = f(x+\Delta x)-f(x)$. The ratio $\frac{\Delta y}{\Delta x}$ is then infinitely close to the derivative $f'(x)$. We then set $dx=\Delta x$ and $dy=f'(x)dx$ so that $f'(x)=\frac{dy}{dx}$ by definition. One of the advantages of this approach is that one obtains an elegant proof of chain rule $\frac{dy}{dx}=\frac{dy}{du}\frac{du}{dx}$ by applying the standard part function to the equality $\frac{\Delta y}{\Delta x}=\frac{\Delta y}{\Delta u}\frac{\Delta u}{\Delta x}$.
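To make this concrete, here is the computation for the example $y=x^2$ from above, with $x$ real and $\Delta x$ a nonzero infinitesimal. Writing $\operatorname{st}$ for the standard part function is a notational assumption here, since the text only names the function: $$\frac{\Delta y}{\Delta x}=\frac{(x+\Delta x)^2-x^2}{\Delta x}=2x+\Delta x,\qquad f'(x)=\operatorname{st}\!\left(\frac{\Delta y}{\Delta x}\right)=2x.$$ Setting $dx=\Delta x$ and $dy=2x\,dx$ then gives $\frac{dy}{dx}=2x$ exactly, while the ratio $\frac{\Delta y}{\Delta x}$ differs from it by the infinitesimal $\Delta x$.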

In the real-based approach to the calculus, there are no infinitesimals and therefore it is impossible to interpret $\frac{dy}{dx}$ as a true ratio. Therefore claims to that effect have to be relativized modulo anti-infinitesimal foundational commitments.

Note 1. I recently noticed that Leibniz's $\,{}_{\ulcorner\!\urcorner}\,$ notation occurs several times in Margaret Baron's book The origins of infinitesimal calculus, starting on page 282. It's well worth a look.

Note 2. It should be clear that Leibniz did view $\frac{dy}{dx}$ as a ratio. (Some of the other answers seem to be worded ambiguously with regard to this point.)

-

This is somewhat beside the point, but I don't think that applying the standard part function to prove the Chain Rule is particularly more (or less) elegant than applying the limit as $\Delta{x} \to 0$. Both attempts hit a snag since $\Delta{u}$ might be $0$ when $\Delta{x}$ is not (regardless of whether one is thinking of $\Delta{x}$ as an infinitesimal quantity or as a standard variable approaching $0$), as for example when $u = x \sin(1/x)$. – Toby Bartels Feb 21 '18 at 23:26

-

This snag does exist in the epsilon-delta setting, but it does not exist in the infinitesimal setting because if the derivative is nonzero then one necessarily has $\Delta u\not=0$, and if the derivative is zero then there is nothing to prove. @TobyBartels – Mikhail Katz Feb 22 '18 at 09:47

-

1Notice that the function you mentioned is undefined (or not differentiable if you define it) at zero, so chain rule does not apply in this case anyway. @TobyBartels – Mikhail Katz Feb 22 '18 at 10:15

-

1Sorry, that should be $u = x^2 \sin(1/x)$ (extended by continuity to $x = 0$, which is the argument at issue). If the infinitesimal $\Delta{x}$ is $1/(n\pi)$ for some (necessarily infinite) hyperinteger $n$, then $\Delta{u}$ is $0$. It's true that in this case, the derivative $\mathrm{d}u/\mathrm{d}x$ is $0$ too, but I don't see why that matters; why is there nothing to prove in that case? (Conversely, if there's nothing to prove in that case, then doesn't that save the epsilontic proof as well? That's the only way that $\Delta{u}$ can be $0$ arbitrarily close to the argument.) – Toby Bartels Feb 23 '18 at 12:21

-

1If $\Delta u$ is zero then obviously $\Delta y$ is also zero and therefore both sides of the formula for chain rule are zero. On the other hand, if the derivative of $u=g(x)$ is nonzero then $\Delta u$ is necessarily nonzero. This is not necessarily the case when one works with finite differences. @TobyBartels – Mikhail Katz Feb 24 '18 at 19:28

-

1OK, I think that I see it now! Given a nonzero infinitesimal value of $\Delta{x}$ (and calculating $\Delta{u}$ and $\Delta{y}$ from it), we need to prove that $\Delta{y}/\Delta{x}$ is infinitely close to $f'(u) g'(x)$. You're saying, treat $\Delta{u} = 0$ and $\Delta{u} \ne 0$ separately: in the latter case, apply standard parts to $\Delta{y}/\Delta{x} = \Delta{y}/\Delta{u} \cdot \Delta{u}/\Delta{x}$; in the former case, $g'(x) = 0$, $\Delta{y} = 0$ too, and $\operatorname{st}(0) = 0$ so it's trivial. This argument would be difficult to adapt to epsilontics. – Toby Bartels Feb 26 '18 at 23:25

-

1I've just spent the last half hour searching for a review that I was sure that I'd seen of Keisler's nonstandard Calculus textbook (I had thought that it was on MathSciNet but it doesn't seem to be), in which the reviewer said that the book proved the Chain Rule easily by cancelling factors; but when I looked in the book (and the instructor's companion), the proof involved the Increment Theorem, much like the standard proof in some standard textbooks. So when you said that it was easy, I thought that you must be making the same mistake! – Toby Bartels Feb 26 '18 at 23:57

-

1@TobyBartels, the proof via the increment theorem in Keisler is simpler because the last stage is simply applying ***st***. Also, this can be proved via the $\Delta u$'s by considering two cases: when $\Delta u$ is zero and nonzero. – Mikhail Katz Mar 20 '18 at 11:35

Typically, the $\frac{dy}{dx}$ notation is used to denote the derivative, which is defined as the limit we all know and love (see Arturo Magidin's answer). However, when working with differentials, one can interpret $\frac{dy}{dx}$ as a genuine ratio of two fixed quantities.

Draw a graph of some smooth function $f$ and its tangent line at $x=a$. Starting from the point $(a, f(a))$, move $dx$ units right along the tangent line (not along the graph of $f$). Let $dy$ be the corresponding change in $y$.

So, we moved $dx$ units right, $dy$ units up, and stayed on the tangent line. Therefore the slope of the tangent line is exactly $\frac{dy}{dx}$. However, the slope of the tangent at $x=a$ is also given by $f'(a)$, hence the equation

$$\frac{dy}{dx} = f'(a)$$

holds when $dy$ and $dx$ are interpreted as fixed, finite changes in the two variables $x$ and $y$. In this context, we are not taking a limit on the left hand side of this equation, and $\frac{dy}{dx}$ is a genuine ratio of two fixed quantities. This is why we can then write $dy = f'(a) dx$.
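As a small numerical illustration of this picture (a sketch under my own assumptions, not the answerer's code): take $f(x)=\sin x$ and $a=1$, both chosen arbitrarily, and a hypothetical helper `tangent_dy` that computes the rise along the tangent line.

```python
import math

def tangent_dy(fprime_a, dx):
    """Rise along the tangent line for a horizontal step dx: dy = f'(a) * dx."""
    return fprime_a * dx

a = 1.0
slope = math.cos(a)              # f(x) = sin(x), so f'(a) = cos(a)

for dx in (2.0, 0.5, -3.0):      # fixed, finite steps; nothing here is small
    dy = tangent_dy(slope, dx)                 # change along the tangent line
    delta_y = math.sin(a + dx) - math.sin(a)   # change along the graph of f
    print(f"dx={dx:5.1f}  dy/dx={dy / dx:.6f}  delta_y/dx={delta_y / dx:.6f}")
```

The `dy/dx` column is the same for every step, while `delta_y/dx` (measured along the curve) varies, which is the distinction between moving along the tangent line and moving along the graph.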


-

8This sounds a lot like the explanation of differentials that I recall hearing from my Calculus I instructor (an analyst of note, an expert on Wiener integrals): "$dy$ and $dx$ are any two numbers whose ratio is the derivative . . . they are useful for people who are interested in (sniff) approximations." – bof Dec 28 '13 at 03:34

-

1@bof: But we can't describe almost every real number in the real world, so I guess having approximations is quite good. =) – user21820 May 13 '14 at 10:13

-

1@user21820 anything that we can approximate to arbitrary precision we can define... It's the result of that algorithm. – k_g May 18 '15 at 01:34

-

3@k_g: Yes of course. My comment was last year so I don't remember what I meant at that time anymore, but I probably was trying to say that since we already are limited to countably many definable reals, it's much worse if we limit ourselves even further to closed forms of some kind and eschew approximations. Even more so, in the real world we rarely have exact values but just confidence intervals anyway, and so approximations are sufficient for almost all practical purposes. – user21820 May 18 '15 at 05:04

Of course it is a ratio.

$dy$ and $dx$ are differentials. Thus they act on tangent vectors, not on points. That is, they are functions on the tangent manifold that are linear on each fiber. On the tangent manifold the ratio of the two differentials $\frac{dy}{dx}$ is just a ratio of two functions and is constant on every fiber (except for being ill-defined on the zero section). Therefore it descends to a well-defined function on the base manifold. We refer to that function as the derivative.

As pointed out in the original question, many first-year calculus books these days even try to define differentials loosely, and at least informally point out that for differentials $dy = f'(x)\, dx$. (Note that both sides of this equation act on vectors, not on points.) Both $dy$ and $dx$ are perfectly well-defined functions on vectors, and their ratio is therefore a perfectly meaningful function on vectors. Since it is constant on fibers (minus the zero section), that well-defined ratio descends to a function on the original space.

At worst one could object that the ratio $\frac{dy}{dx}$ is not defined on the zero section.


-

4Can anything meaningful be made of higher order derivatives in the same way? – Francis Davey Mar 08 '15 at 10:41

-

2You can simply mimic the procedure to get a second or third derivative. As I recall when I worked that out, the same higher partial derivatives get realized in it in multiple ways, which is awkward. The standard approach is more direct. It is called Jets and there is currently a Wikipedia article on Jet (mathematics). – John Robertson May 02 '15 at 16:51

-

@JohnRobertson What does it mean that "$\frac{dy}{dx}$ descends to a well defined function"? Tangent manifold is the tangent bundle, right? I'm not very familiar with this terminology, so if you could just direct me to an article establishing these concepts I would be much obliged. – Lurco Jul 06 '15 at 13:00

-

3Tangent manifold is the tangent bundle. And what it means is that dy and dx are both perfectly well defined functions on the tangent manifold, so we can divide one by the other giving dy/dx. It turns out that the value of dy/dx on a given tangent vector only depends on the base point of that vector. As its value only depends on the base point, we can take dy/dx as really defining a function on original space. By way of analogy, if f(u,v) = 3*u + sin(u) + 7 then even though f is a function of both u and v, since v doesn't affect the output, we can also consider f to be a function of u alone. – John Robertson Jul 06 '15 at 15:53

-

4Your answer is in the opposition with many other answers here! :) I am confused! So is it a ratio or not or both!? – Hosein Rahnama Jul 15 '16 at 20:24

-

1@H.R. It is a ratio. It is a ratio of differentials. dy is a real thing. It is a differential on the tangent manifold. So is dx. If I divide dy by dx I get a function, which is f'(x). In fact dy is the differential operator d applied to the function y and dx is the differential operator d applied to the function x. If I apply the differential operator d to a function f(x) then the result is f'(x) dx which is the function f'(x) times the differential dx. – John Robertson Oct 17 '16 at 00:13

-

Ah! Thanks for answering my old comment! :) So we do not have to go inside non-standard analysis or something to make $dy$ and $dx$ meaningful? So what is the last part of *Arturo Magidin*'s answer about? I think that reading your answer may put future readers into this dilemma so it will be helpful to address this in your answer. :) – Hosein Rahnama Oct 17 '16 at 05:30

-

3How do you simplify all this to the more special-case level of basic calculus where all spaces are Euclidean? The invocations of manifold theory suggest this is an approach that is designed for _non_-Euclidean geometries. – The_Sympathizer Feb 03 '17 at 07:19

-

@H.R. maybe "within" the subject of calculus or even analysis they are not meaningful. But maybe within the subject of geometry there are definitions and theorems for which these ideas work. And the ideas of all this are so connected that it all looks like the same thing. But that's just a guess on my part. What do you think, now two years later? – user123124 Oct 21 '18 at 14:56

The notation $dy/dx$ - in elementary calculus - is simply that: notation to denote the derivative of, in this case, $y$ w.r.t. $x$. (In this case $f'(x)$ is another notation to express essentially the same thing, i.e. $df(x)/dx$, where $f(x)$ signifies the function $f$ of the independent variable $x$. According to what you've written above, $f(x)$ is the function which takes values in the target space $y$.)

Furthermore, by definition, $dy/dx$ at a specific point $x_0$ within the domain of $f$ is the real number $L = \lim_{h \to 0} \frac{f(x_0+h)-f(x_0)}{h}$, if this limit exists. Otherwise, if no such number exists, then the function $f(x)$ does not have a derivative at the point in question (i.e. in our case $x_0$).

For further information you can read the Wikipedia article: http://en.wikipedia.org/wiki/Derivative


-

35So glad that wikipedia finally added an entry for the derivative... – The Chaz 2.0 Aug 10 '11 at 17:50

$\boldsymbol{\dfrac{dy}{dx}}$ is definitely not a ratio - it is the limit (if it exists) of a ratio. This is Leibniz's notation for the derivative (c. 1670), which prevailed over Newton's $\dot{y}(x)$.

Still, most Engineers and even many Applied Mathematicians treat it as a ratio. A very common such case is when solving separable ODEs, i.e. equations of the form $$ \frac{dy}{dx}=f(x)g(y), $$ writing the above as $$f(x)\,dx=\frac{dy}{g(y)}, $$ and then integrating.

Apparently this is not Mathematics, it is a symbolic calculus.

Why are we allowed to integrate the left hand side with respect to $x$ and the right hand side with respect to $y$? What is the meaning of that?

This procedure often leads to the right solution, but not always. For example applying this method to the IVP $$ \frac{dy}{dx}=y+1, \quad y(0)=-1,\qquad (\star) $$ we get, for some constant $c$, $$ \ln (y+1)=\int\frac{dy}{y+1} = \int dx = x+c, $$ equivalently $$ y(x)=\mathrm{e}^{x+c}-1. $$ Note that it is impossible to incorporate the initial condition $y(0)=-1$, as $\mathrm{e}^{x+c}$ never vanishes. By the way, the solution of $(\star)$ is $y(x)\equiv -1$.

Even worse, consider the case of the IVP $$ y'=\frac{3y^{1/3}}{2}, \quad y(0)=0. \tag{$\star\star$} $$ This IVP does not enjoy uniqueness. It possesses infinitely many solutions. Nevertheless, using this symbolic calculus, we obtain that $y^{2/3}=x$, which is one of the infinitely many solutions of $(\star\star)$. Another one is $y\equiv 0$.
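Both phenomena can be checked numerically. The sketch below is my own illustration, not the answerer's: the helper `residual` and all function names are hypothetical, derivatives are estimated by central differences, and $(\star\star)$ is only sampled at $x>0$.

```python
import math

def residual(y, rhs, x, h=1e-6):
    """Central-difference estimate of y'(x) minus rhs(y(x)); near 0 for a solution."""
    dydx = (y(x + h) - y(x - h)) / (2 * h)
    return dydx - rhs(y(x))

# IVP (star): y' = y + 1, y(0) = -1
def rhs_star(y):
    return y + 1

def constant_solution(x):
    return -1.0                      # the solution the symbolic recipe misses

def recipe_solution(c):
    return lambda x: math.exp(x + c) - 1   # what separating variables produces

# IVP (star star): y' = (3/2) * y**(1/3), y(0) = 0, sampled at x > 0
def rhs_2star(y):
    return 1.5 * y ** (1 / 3)

def zero_solution(x):
    return 0.0

def power_solution(x):
    return x ** 1.5                  # from y**(2/3) = x

xs = [0.5, 1.0, 2.0]
res_constant = [residual(constant_solution, rhs_star, x) for x in xs]
res_zero = [residual(zero_solution, rhs_2star, x) for x in xs]
res_power = [residual(power_solution, rhs_2star, x) for x in xs]
print(res_constant, res_zero, res_power)
```

All three residual lists come out at roundoff level, so $y\equiv-1$ solves $(\star)$ even though no member of `recipe_solution(c)` starts at $-1$, and both `zero_solution` and `power_solution` solve $(\star\star)$ with the same initial value.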

In my opinion, Calculus should be taught rigorously, with $\delta$'s and $\varepsilon$'s. Once these are well understood, then one can use such symbolic calculus, provided that he/she is convinced under which restrictions it is indeed permitted.


-

13I would disagree with this to some extent with your example, as many would write the solution as $y(x)+1=e^{x+c}\rightarrow y(x)+1=e^{c}e^x\rightarrow y(x)=Ce^x-1$ for the 'appropriate' $C$. Then we have $y(0)=Ce^0-1=-1$, implying $C=0$, which avoids the issue. This is how many introductory D.E. students would answer the question, so the issue is never noticed. But yes, $\frac{dy}{dx}$ is certainly not a ratio. – mathematics2x2life Dec 20 '13 at 21:11

-

18Your example works if $dy/dx$ is handled naively as a quotient. Given $dy/dx = y+1$, we can deduce $dx = dy/(y+1)$, but as even undergraduates know, you can't divide by zero, so this is true only as long as $y+1 \ne 0$. Thus we correctly conclude that $(\star)$ has no solution such that $y+1 \ne 0$. Solving for $y+1=0$, we have $dy/dx = 0$, so $y = \int 0 dx = 0 + C$, and $y(0)=-1$ constrains $C=-1$. – Gilles 'SO- stop being evil' Aug 28 '14 at 14:47

-

2Since you mention Leibniz, it may be helpful to clarify that Leibniz did view $\frac{dy}{dx}$ as a ratio, for the sake of historical accuracy. – Mikhail Katz Dec 07 '15 at 18:59

-

+1 for the interesting IVP example, I have never noticed that subtlety. – electronpusher Apr 22 '17 at 03:04

-

6You got the wrong answer because you divided by zero, not because there's anything wrong with treating the derivative as a ratio. – Toby Bartels Apr 29 '17 at 04:20


It is not a ratio, just as $dx$ is not a product.


-

8I wonder what motivated the downvote. I do find strange that students tend to confuse Leibniz's notation with a quotient, and not $dx$ (or even $\log$!) with a product: they are both indivisible notations... My answer above just makes this point. – Mariano Suárez-Álvarez Feb 10 '11 at 00:12

-

11I think that the reason why this confusion arises in some students may be related to the way in which this notation is used for instance when calculating integrals. Even though as you say, they are indivisible, they are separated "formally" in any calculus course in order to aid in the computation of integrals. I suppose that if the letters in $\log$ were separated in a similar way, the students would probably make the same mistake of assuming it is a product. – Adrián Barquero Feb 10 '11 at 04:09

-

6I once heard a story of a university applicant who was asked at interview to find $dy/dx$ and didn't understand the question, no matter how the interviewer phrased it. It was only after the interviewer wrote it out that the student promptly informed the interviewer that the two $d$'s cancelled and he was in fact mistaken. – jClark94 Jan 30 '12 at 19:54


-

@AndréCaldas, it is a statement of a fact. You can choose not to «be imposed» of it, just like you can refuse to accept to «be imposed» of the fact that if you jump off a 10th floor window you will die from the crash on the ground. – Mariano Suárez-Álvarez Sep 12 '13 at 16:14

-

4I was under the impression that answers were supposed to be useful. A statement of a fact might be useful for the OP or others visiting the page. I don't have to "choose" to be or not be imposed... there is in fact another option: not writing useless answers. Remember the main reason we are here is not that you feel so superior and full of yourself. Be useful!!! Cheers... – André Caldas Sep 13 '13 at 16:07

-

The question that was asked here is: «is $dy/dx$ not a ratio?» If you read my answer, you will notice that it very succinctly answers the question, and even includes an example of a similar notation which could also possibly cause the same sort of confusion. If you feel you can answer more usefully this question, then by all means add another answer: whatever it is that you are doing here is not being useful to anyone. – Mariano Suárez-Álvarez Sep 13 '13 at 19:28

-

I would appreciate it if you would avoid conjecturing about my motivations for answering in one way or another: at the very least, do not do it here, where it constitutes *noise*. – Mariano Suárez-Álvarez Sep 13 '13 at 19:32

-

20I find the statement that "students tend to confuse Leibniz's notation with a quotient" a bit problematic. The reason for this is that Leibniz certainly thought of $\frac{dy}{dx}$ as a quotient. Since it behaves as a ratio in many contexts (such as the chain rule), it may be more helpful to the student to point out that in fact the derivative can be said to be "equal" to the ratio $\frac{dy}{dx}$ if "equality" is interpreted as a more general relation of equality "up to an infinitesimal term", which is how Leibniz thought of it. I don't think this is comparable to thinking of "dx" as a product – Mikhail Katz Oct 02 '13 at 12:57

-

1user72694: yes, and $\frac{dx}{dy}$ becomes *really* confusing, for example, when the student learns about ODEs with separable variables: $y\;\frac{dy}{dx}=g(x)$, so "multiply both sides by dx" and you get $ydy=g(x)dx$, so you only need to -- that part is reeealy confusing -- "integrate", as in "formally put the integral sign" on both sides. And many students doing this usually do not think of $dx$ or $dy$ as a differential. They do it automatically, not thinking about what $dx$ and $dy$ are. – Jay Jun 19 '14 at 04:06

-

"...just as $dx$ is not a product." You can get $dx$ by applying the $d$ operator on the $x$ differential form, and as such $dx$ is a group operation, so I think you have the right to call it a product. – Dave Jul 26 '14 at 08:25

-

1dy/dx is a ratio. It is a ratio of differentials. A differential is a function on vectors, not a function on points. – John Robertson Jul 08 '15 at 17:15

-

1I don't think the confusion is coming from the line between dy and dx. – JobHunter69 Nov 05 '17 at 04:22

In most formulations, $\frac{dy}{dx}$ cannot be interpreted as a ratio, as $dx$ and $dy$ do not actually exist in them. An exception to this is shown in this book. How it works, as Arturo said, is that we allow infinitesimals (by using the hyperreal number system). It is well formulated, and I prefer it to limit notions, as this is how it was invented. It's just that they weren't able to formulate it correctly back then. I will give a slightly simplified example. Let us say you are differentiating $y=x^2$. Now let $dx$ be an arbitrary nonzero infinitesimal (the result is the same no matter which one you choose, if your function is differentiable at that point). $$dy=(x+dx)^2-x^2$$ $$dy=2x\times dx+dx^2$$ Now when we take the ratio, it is: $$\frac{dy}{dx}=2x+dx$$

(Note: Actually, $\frac{\Delta y}{\Delta x}$ is what we found above, and $dy$ is defined so that $\frac{dy}{dx}$ is $\frac{\Delta y}{\Delta x}$ rounded to the nearest real number.)
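The algebra above can be replayed with exact arithmetic. This is only a sketch: Python has no hyperreals, so a small rational `dx` stands in for the infinitesimal, and the helper name `difference_quotient` is my own.

```python
from fractions import Fraction

def difference_quotient(x, dx):
    """((x + dx)**2 - x**2) / dx, computed exactly with rationals."""
    return ((x + dx) ** 2 - x ** 2) / dx

x = Fraction(3)
for k in (1, 3, 6):
    dx = Fraction(1, 10 ** k)
    q = difference_quotient(x, dx)
    print(dx, q, q - 2 * x)   # the last column equals dx
```

The quotient is always exactly $2x+dx$; with a genuine infinitesimal $dx$, rounding to the nearest real number (the standard part) discards the leftover $dx$ and leaves $2x$.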


-

So, your example is still incomplete. To complete it, you should either take the limit of $dx\to0$, or take standard part of the RHS if you treat $dx$ as infinitesimal instead of as $\varepsilon$. – Ruslan May 08 '18 at 19:49

-

$\frac{dy}{dx}$ is not a ratio - it is a symbol used to represent a limit.


-

8This is one possible view on $\frac{dy}{dx}$, related to the fact that the common number system does not contain infinitesimals, making it impossible to justify this symbol as a ratio in that particular framework. However, Leibniz certainly meant it to be a ratio. Furthermore, it can be justified as a ratio in modern infinitesimal theories, as mentioned in some of the other answers. – Mikhail Katz Nov 17 '13 at 14:57

I realize this is an old post, but I think it's worthwhile to point out that in the so-called Quantum Calculus $\frac{dy}{dx}$ *is* a ratio. The subject *starts* off immediately by saying this is a ratio, by defining differentials and then calling derivatives a ratio of differentials:

The $q$-differential is defined as

$$d_q f(x) = f(qx) - f(x)$$

and the $h$-differential as $$d_h f(x) = f(x+h) - f(x)$$

It follows that $d_q x = (q-1)x$ and $d_h x = h$.

From here, we go on to define the $q$-derivative and $h$-derivative, respectively:

$$D_q f(x) = \frac{d_q f(x)}{d_q x} = \frac{f(qx) - f(x)}{(q-1)x}$$

$$D_h f(x) = \frac{d_h f(x)}{d_h x} = \frac{f(x+h) - f(x)}{h}$$

Notice that

$$\lim_{q \to 1} D_q f(x) = \lim_{h\to 0} D_h f(x) = \frac{df(x)}{dx} \neq \text{a ratio}$$
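To make the two definitions concrete, here is a minimal sketch in exact rational arithmetic. The function names mirror the symbols above, but the code itself and the test function `cube` are my own illustration.

```python
from fractions import Fraction

def d_q(f, x, q):
    """q-differential: f(q*x) - f(x)."""
    return f(q * x) - f(x)

def d_h(f, x, h):
    """h-differential: f(x + h) - f(x)."""
    return f(x + h) - f(x)

def identity(t):
    return t

def D_q(f, x, q):
    """q-derivative as a literal ratio of q-differentials (needs q != 1, x != 0)."""
    return d_q(f, x, q) / d_q(identity, x, q)

def D_h(f, x, h):
    """h-derivative as a literal ratio of h-differentials (needs h != 0)."""
    return d_h(f, x, h) / d_h(identity, x, h)

def cube(t):
    return t ** 3

x = Fraction(2)
for k in (1, 2, 4):
    step = Fraction(1, 10 ** k)
    print(D_q(cube, x, 1 + step), D_h(cube, x, step))   # both approach 3 * x**2 = 12
```

For $f(x)=x^3$ one gets $D_q f(x) = (q^2+q+1)x^2$ and $D_h f(x) = 3x^2+3xh+h^2$, each an honest quotient of two numbers, and each tending to $3x^2$ in the respective limit.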


-

I just want to point out that @Yiorgos S. Smyrlis did already state that dy/dx is not a ratio, but a limit of a ratio (if it exists). I only included my response because this subject seems interesting (I don't think many have heard of it) and in this subject we work in the confines of it being a ratio... but certainly the limit is not really a ratio. – Squirtle Dec 28 '13 at 01:54

-

You start out saying that it is a ratio and then end up saying that it is not a ratio. It's interesting that you can define it as a limit of ratios in two different ways, but you've still only given it as a limit of ratios, not as a ratio directly. – Toby Bartels Apr 29 '17 at 04:40

-

2I guess you mean to say that the q-derivative and h-derivative are ratios; that the usual derivative may be recovered as limits of these is secondary to your point. – Toby Bartels May 02 '17 at 21:55

-

To ask "Is $\frac{dy}{dx}$ a ratio or isn't it?" is like asking "Is $\sqrt 2$ a number or isn't it?" The answer depends on what you mean by "number". $\sqrt 2$ is not an Integer or a Rational number, so if that's what you mean by "number", then the answer is "No, $\sqrt 2$ is not a number."

However, the Real numbers are an extension of the Rational numbers that includes irrational numbers such as $\sqrt 2$, and so, in this set of numbers, $\sqrt 2$ is a number.

In the same way, a differential such as $dx$ is not a Real number, but it is possible to extend the Real numbers to include infinitesimals, and, if you do that, then $\frac{dy}{dx}$ is truly a ratio.

When a professor tells you that $dx$ by itself is meaningless, or that $\frac{dy}{dx}$ is not a ratio, they are correct, in terms of "normal" number systems such as the Real or Complex systems, which are the number systems typically used in science, engineering and even mathematics. Infinitesimals can be placed on a rigorous footing, but sometimes at the cost of surrendering some important properties of the numbers we rely on for everyday science.

See https://en.wikipedia.org/wiki/Infinitesimal#Number_systems_that_include_infinitesimals for a discussion of number systems that include infinitesimals.


Assuming you're happy with $dy/dx$, when it becomes $\ldots dy$ and $\ldots dx$ it means that it follows that what precedes $dy$ in terms of $y$ is equal to what precedes $dx$ in terms of $x$.

"in terms of" = "with reference to".

That is, if "$a \frac{dy}{dx} = b$", then it follows that "$a$ with reference to $y$ = $b$ with reference to $x$". If the equation has all the terms with $y$ on the left and all with $x$ on the right, then you've got to a good place to continue.

The phrase "it follows that" means you haven't really moved $dx$ as in algebra. It now has a different meaning which is also true.


Anything that can be said in mathematics can be said in at least 3 different ways...all things about derivation/derivatives depend on the meaning that is attached to the word: TANGENT. It is agreed that the derivative is the "gradient function" for tangents (at a point); and spatially (geometrically) the gradient of a tangent is the "ratio" ( "fraction" would be better ) of the y-distance to the x-distance. Similar obscurities occur when "spatial and algebraic" are notationally confused.. some people take the word "vector" to mean a track!


-

2According to John Robinson (2 days ago) vectors... elements (points) of vector spaces are different from points – kozenko May 03 '14 at 03:43

There are many answers here, but the simplest seems to be missing. So here it is:

Yes, it is a ratio, for exactly the reason that you said in your question.


-

4Some other people have already more-or-less given this answer, but then go into more detail about how it fits into tangent spaces in higher dimensions and whatnot. That is all very interesting, of course, but it may give the impression that the development of the derivative as a ratio that appears in the original question is not enough by itself. But it *is* enough. – Toby Bartels Apr 29 '17 at 04:50

-

3Nonstandard analysis, while providing an interesting perspective and being closer to what Leibniz himself was thinking, is also not necessary for this. The definition of differential that is cited in the question is not infinitesimal, but it still makes the derivative into a ratio of differentials. – Toby Bartels Apr 29 '17 at 04:50

I am going to join @Jesse Madnick here, and try to interpret $\frac{dy}{dx}$ as a ratio. The idea is: let's interpret $dx$ and $dy$ as functions on $T\mathbb R^2$, as if they were differential forms. For each tangent vector $v$, set $dx(v):=v(x)$. If we identify $T\mathbb R^2$ with $\mathbb R^4$, we get that $(x,y,dx,dy)$ is just the canonical coordinate system for $\mathbb R^4$. If we exclude the points where $dx=0$, then $\frac{dy}{dx} = 2x$ is a perfectly healthy equation; its solutions form a subset of $\mathbb R^4$.

Let's see if it makes any sense. If we fix $x$ and $y$, the solutions form a straight line through the origin of the tangent space at $(x,y)$, its slope is $2x$. So, the set of all solutions is a distribution, and the integral manifolds happen to be the parabolas $y=x^2+c$. Exactly the solutions of the differential equation that we would write as $\frac{dy}{dx} = 2x$. Of course, we can write it as $dy = 2xdx$ as well. I think this is at least a little bit interesting. Any thoughts?
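One crude way to see the integral manifolds emerge is to follow the line field in many small steps, each step $(dx, dy)$ constrained by $dy = 2x\,dx$. The sketch below is just that (an Euler-style walk in exact rationals); the helper name `follow_line_field` and the starting point are assumptions of mine.

```python
from fractions import Fraction

def follow_line_field(x0, y0, dx, steps):
    """Take repeated steps (dx, dy) with dy = 2*x*dx, starting from (x0, y0)."""
    x, y = x0, y0
    for _ in range(steps):
        dy = 2 * x * dx      # the constraint dy/dx = 2x, solved for dy
        x, y = x + dx, y + dy
    return x, y

c = Fraction(5)
n = 1000
x_end, y_end = follow_line_field(Fraction(0), c, Fraction(1, n), n)
gap = (x_end ** 2 + c) - y_end   # how far the walk ends below y = x**2 + c
print(x_end, y_end, gap)
```

Starting at $(0, c)$, the walk ends within one step size of the parabola $y=x^2+c$, and the gap shrinks as the steps are refined, consistent with the parabolas being the integral curves.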


The derivative $\frac{dy}{dx}$ is not a ratio, but rather a representation of a ratio within a limit.

Similarly, $dx$ is a representation of $\Delta x$ inside a limit with interaction. This interaction can be in the form of multiplication, division, etc. with other things inside the same limit.

This interaction inside the limit is what makes the difference. You see, a limit of a ratio is not necessarily the ratio of the limits, and that is one example of why the interaction is considered to be inside the limit. This limit is hidden or left out in the shorthand notation that Leibniz invented.

The simple fact is that most of calculus is a shorthand representation of something else. This shorthand notation allows us to calculate things more quickly and it looks better than what it is actually representative of. The problem comes in when people expect this notation to act like actual maths, which it can't because it is just a representation of actual maths.

So, in order to see the underlying properties of calculus, we always have to convert it to the actual mathematical form and then analyze it from there. Then by memorization of basic properties and combinations of these different properties we can derive even more properties.


$dy/dx$ is quite possibly the most versatile piece of notation in mathematics. It can be interpreted as

1. A shorthand for the limit of a quotient: $$ \frac{dy}{dx}=\lim_{\Delta x\to 0}\frac{\Delta y}{\Delta x} \, .$$

2. The result of applying the derivative operator, $d/dx$, to a given expression $y$.

3. The ratio of two infinitesimals $dy$ and $dx$ (with this interpretation made rigorous using non-standard analysis).

4. The ratio of two differentials $dy$ and $dx$ acting along the tangent line to a given curve.

All of these interpretations are equally valid and useful in their own way. In interpretations (1) and (2), which are the most common, $dy/dx$ is not seen as a ratio, despite the fact that it often behaves as one. Interpretations (3) and (4) offer viable alternatives. Since Mikhail Katz has already given a good exposition of infinitesimals, let me focus the remainder of this answer on interpretation (4).

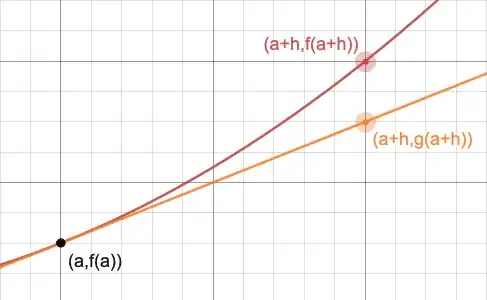

Given a curve $y=f(x)$, the equation of the line tangent to the point $(a,f(a))$ is given by $$ g(x)=f'(a)(x-a)+f(a) \, . $$ This tangent line provides us with a linear approximation of a function around a given point. For small $h$, the value of $f(a+h)$ is roughly equal to $g(a+h)$. Hence, $$ f(a+h) \approx f(a) + f'(a)h $$ The linear approximation formula has a very clear geometric meaning, where you imagine staying on the tangent line rather than the curve itself:

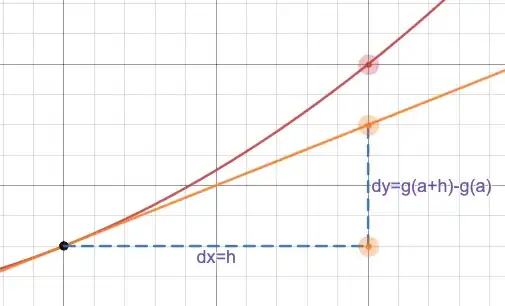

We can then define $dx=h$ and $dy=g(a+h)-g(a)$:

Since the tangent line has a constant gradient, we have $$ \frac{dy}{dx}=\frac{g(a+h)-g(a)}{h}=g'(a)=f'(a) \, , $$ provided that $dx$ is non-zero. Here, $dy$ and $dx$ are genuine quantities that act along the tangent line to the curve, and can be manipulated like numbers because they are numbers.

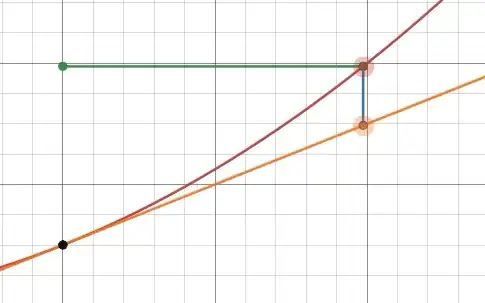

This notion of $dy/dx$ becomes very useful when you realise that, in a very meaningful sense, the tangent line is the best linear approximation of a function around a given point. We can turn the linear approximation formula into an exact equality by letting $r(h)$ be the remainder term: $$ f(a+h)=g(a+h)+r(h)=f(a)+f'(a)h+r(h) \, . $$ As $h\to0$, $r(h)\to0$. In fact, the remainder term satisfies a stronger condition: $$ \lim_{h \to 0}\frac{r(h)}{h}=0 \, . $$ The fact that the above limit is equal to $0$ demonstrates just how good the tangent line is at approximating the local behaviour of a function. It's not that impressive that when $h$ is small, $r(h)$ is also very small (any good approximation should have this property). What makes the tangent line unique is that when $h$ is small, $r(h)$ is orders of magnitude smaller, meaning that the 'relative error' is tiny. This relative error is showcased in the below animation, where the length of the green line is $h$, whereas the length of the blue line is $r(h)$:

Seen in this light, the statement $$ \frac{dy}{dx}=f'(a) $$ is an expression of equality between the quotient of two numbers $dy$ and $dx$, and the derivative $f'(a)$. It makes perfect sense to multiply both sides by $dx$, to get $$ dy=f'(a)dx \, . $$ Note that $dy+r(h)=f(a+h)-f(a)$, and so \begin{align} f(a+h)-f(a) - r(h) &= f'(a)h \\ f(a+h) &= f(a) + f'(a)h + r(h) \, , \end{align} which gives us another notion of the derivative, through the lens of linear approximation.
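The claim that $r(h)/h \to 0$ is easy to watch numerically. Below is a small sketch with exact rationals; the choice $f(x)=x^3$, the point $a=1$, and the helper name `remainder` are illustrative assumptions rather than anything from the answer.

```python
from fractions import Fraction

def f(x):
    return x ** 3

def fprime(x):
    return 3 * x ** 2

def remainder(a, h):
    """r(h) = f(a + h) - f(a) - f'(a) * h, the gap between curve and tangent line."""
    return f(a + h) - f(a) - fprime(a) * h

a = Fraction(1)
for k in (1, 2, 3, 4):
    h = Fraction(1, 10 ** k)
    r = remainder(a, h)
    print(h, r, r / h)   # r/h shrinks roughly like 3*h
```

For this $f$, $r(h) = 3ah^2 + h^3$, so each tenfold reduction of $h$ cuts $r(h)$ by about a factor of a hundred and $r(h)/h$ by about ten.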


The best way to understand $d$ is that of being an operator, with a simple rule

$$df(x)=f'(x)dx$$

If you take this definition then $dy/dx$ is indeed a ratio as it is stripping $f'(x)dx$ of $dx$

$$\frac{dy}{dx}=\frac{y'dx}{dx}=y'$$

This is done in the same manner as $12/3$ is stripping $12=4\cdot3$ of $3$

In a certain context, $\frac{dy}{dx}$ is a ratio.

$\frac{dy}{dx}=s$ means:

Standard calculus

$\forall \epsilon\ \exists \delta\ \forall dx$

If $0<|dx|\leq\delta$

If $(x, y) = (x_0, y_0)$, but $(x, y)$ also could have been $(x_0+dx, y_0+\Delta y)$

If $dy = sdx$

Then $\left|\frac{\Delta y}{dx}-\frac{dy}{dx}\right|\leq \epsilon$

Nonstandard calculus

$\forall dx$ where $dx$ is a nonzero infinitesimal

$\exists \epsilon$ where $\epsilon$ is infinitesimal

If $(x, y) = (x_0, y_0)$, but $(x, y)$ also could have been $(x_0+dx, y_0+\Delta y)$

If $dy = sdx$

Then $\frac{\Delta y}{dx} - \frac{dy}{dx} = \epsilon$

In either case, $dx$ gets its meaning from the restriction placed on it (which is described using quantifiers), and $dy$ gets its meaning from the value of $s$ and the restriction placed on $dx$.

Therefore, it makes sense to make a statement about $\frac{dy}{dx}$ as a ratio if the statement is appropriately quantified and $dx$ is appropriately restricted.

Less formally, $dx$ is understood as "the amount by which $x$ is nudged", $dy$ is understood as "the amount by which $y$ is nudged on the tangent line", and $\Delta y$ is understood as "the amount by which $y$ is nudged on the curve". This is a perfectly sensible way to talk about a rough intuition.


Of course it is a fraction in appropriate definition.

Let me add my view of answer, which is updated text from some other question.

According to, for example, Murray H. Protter, Charles B. Jr. Morrey - Intermediate Calculus-(2012) page 231, the differential of a function $f:\mathbb{R} \to \mathbb{R}$ is defined as a function of two variables given by the formula $$df(x)(h)=f'(x)h$$ so it is a linear function of $h$ that approximates $f$ at the point $x$. It can also be called a 1-form.

This is a fully rigorous definition, which requires nothing beyond the definition/existence of the derivative. But there is more: if we define the differential through the existence of a linear approximation at the point $x=x_0$ for which $$f(x)-f(x_0) = A(x-x_0) + o(x-x_0), \quad x \to x_0$$ holds, then from this we obtain that $f$ has a derivative at the point $x=x_0$ and $A=f'(x_0)$. So existence of the derivative and existence of the differential are equivalent requirements. See Rudin W. - Principles of mathematical analysis-(1976) page 213.

If we apply this definition to the identity function $g(x)=x$, then we obtain $$dg(x)(h)=dx(h)=g'(x)h=h$$ This makes it possible to understand the notation $\frac{dy}{dx}=\frac{df}{dx}$ exactly as an ordinary fraction of differentials, and the equality $\frac{df(x)}{dx}=f'(x)$ holds. Written out in full, for $h \neq 0$, this is $\frac{df(x)(h)}{dx(h)}=\frac{f'(x)h}{h}=f'(x)$.
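The definition above translates almost directly into code. In this minimal sketch the helper `d` and the example $f(x)=x^3$ are assumptions for illustration. The differential of the identity is written `dx(x)(h)` only to keep the same two-argument shape as `df(x)(h)`; its value does not depend on `x`, matching $dx(h)=h$.

```python
def d(fprime):
    """Return the differential df: x -> (h -> f'(x) * h), given the derivative f'."""
    return lambda x: (lambda h: fprime(x) * h)

# Hypothetical example: f(x) = x**3, so f'(x) = 3*x**2.
df = d(lambda x: 3 * x**2)
# The identity function g(x) = x has g'(x) = 1, so dx(h) = h.
dx = d(lambda x: 1)

x = 2
for h in (1, 0.5, -4):
    ratio = df(x)(h) / dx(x)(h)  # an ordinary quotient of two numbers; needs h != 0
    print(h, ratio)              # the quotient is f'(2) = 12 for every admissible h
```

The quotient is a genuine fraction of two numbers for each fixed $h \neq 0$, and the $h$ cancels, which is why the shorthand $\frac{df(x)}{dx}$ can omit it.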

Let me note that we are speaking about the single-variable setting, not the multivariable one.

I cannot explain why some people assert that $\frac{dy}{dx}$ cannot be understood as a fraction; maybe it is a lack of knowledge about the definition of the differential? In any case, in addition to the source above, here is a list of books that define the differential in a way that makes it possible to understand the fraction in question:

- James R. Munkres - Analysis on manifolds-(1997) 252-253 p.

- Vladimir A. Zorich - Mathematical Analysis I- (2016) 176 p.

- Loring W. Tu (auth.) - An introduction to manifolds-(2011) 34 p.

- Herbert Amann, Joachim Escher - Analysis II (v. 2) -(2008) 38 p.

- Robert Creighton Buck, Ellen F. Buck - Advanced Calculus-(1978) 343 p.

- Rudin W. - Principles of mathematical analysis-(1976) 213 p.

- Fichtenholz Gr. M - Course of Differential and Integral Calculus vol. 1 2003 240-241 p.

- Richard Courant - Differential and Integral Calculus, Vol. I, 2nd Edition -Interscience Publishers (1937), page 107

- John M.H. Olmsted - Advanced calculus-Prentice Hall (1961), page 90.

- David Guichard - Single and Multivariable Calculus_ Early Transcendentals (2017), page 144

- Stewart, James - Calculus-Cengage Learning (2016), page 190

- Differential in Calculus

In the interest of fairness, I mention Michael Spivak - Calculus (2008) 155 p., where the author argues against the fraction interpretation, but the argument is of the kind "it is not, because it cannot be". Spivak is one of my most respected and favorite authors, but "Amicus Plato, sed magis amica veritas".


While it can be approximated by a ratio, the derivative itself is actually the limit of a ratio. This becomes more apparent when one moves to partial derivatives, where in general $\frac{1}{\partial y/ \partial x}\neq\partial x / \partial y$. The concept of $dx$ being an actual number and the derivative being an actual ratio is mainly a heuristic device that can yield useful intuitions if used properly.
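One standard illustration of the partial-derivative caveat, not taken from this answer, is polar coordinates: with $x=r\cos\theta$ one gets $\partial x/\partial r=\cos\theta$ (holding $\theta$ fixed), while $r=\sqrt{x^2+y^2}$ gives $\partial r/\partial x=x/r=\cos\theta$ (holding $y$ fixed), so the two are equal rather than reciprocal. The sketch below checks this numerically with central differences; the helper `partial` and the sample point are assumptions made for the example.

```python
import math

def partial(func, args, i, eps=1e-6):
    """Central-difference estimate of the partial derivative of func in argument i."""
    up = list(args); up[i] += eps
    dn = list(args); dn[i] -= eps
    return (func(*up) - func(*dn)) / (2 * eps)

def x_of(r, theta):  # x as a function of (r, theta)
    return r * math.cos(theta)

def r_of(x, y):      # r as a function of (x, y)
    return math.hypot(x, y)

r, theta = 2.0, math.pi / 3
x, y = r * math.cos(theta), r * math.sin(theta)

dx_dr = partial(x_of, (r, theta), 0)  # theta held fixed
dr_dx = partial(r_of, (x, y), 0)      # y held fixed

# Both partials are close to cos(theta); the reciprocal of one is not the other.
print(dx_dr, dr_dx, 1 / dx_dr)
```

The mismatch arises because the two partial derivatives hold different variables fixed, which the fraction notation does not record.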


If I answer from the point of view of a physicist, you may think of the following:

For a particle moving along the $x$-axis with variable velocity, we define the instantaneous velocity $v$ of the object as the rate of change of the particle's $x$-coordinate at that instant. Since we are defining a "rate of change", it must equal the total change divided by the time taken to bring about that change. Since we have to calculate the instantaneous velocity, we take "instant" to mean an infinitesimally short interval of time during which the particle can be assumed to move with constant velocity, and we denote this infinitesimal time interval by $dt$. Now, the particle cannot travel more than an infinitesimal distance $dx$ in an infinitesimally small time. Therefore we define the instantaneous velocity as

$v=\frac{dx}{dt}$, i.e. as the ratio of two infinitesimal changes.

This also helps us get the correct units for velocity, since the change in position is measured in $m$ and the change in time in $s$.
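To make the "ratio of small changes" picture concrete, here is a small sketch that computes average velocities $\Delta x/\Delta t$ over shrinking intervals. The motion $x(t)=5t^2$ (in metres, with $t$ in seconds) and the function names are hypothetical, chosen only for illustration; exact fractions are used to avoid rounding noise.

```python
from fractions import Fraction

def position(t):
    return 5 * t * t  # hypothetical motion: x(t) = 5 t^2, metres for t in seconds

def average_velocity(t, dt):
    """Δx/Δt over the interval [t, t + dt]; metres divided by seconds gives m/s."""
    return (position(t + dt) - position(t)) / dt

t = Fraction(2)
for k in range(1, 5):
    dt = Fraction(1, 10**k)
    # For this motion the average velocity is 20 + 5*dt, approaching 20 m/s.
    print(f"dt = {dt}  average velocity = {float(average_velocity(t, dt))}")
```

Each printed value is an honest ratio of a finite distance to a finite time; the instantaneous velocity is the value these ratios approach as the interval shrinks.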

When defining pressure at a point, acceleration, momentum, electric current through a cross section, etc., we likewise take a ratio of two infinitesimal quantities.

So I think that for practical purposes you may assume it to be a ratio, but what it actually is has been well clarified in other answers.

Also, from my knowledge of mathematics: when I learnt that differentiating a function also gives the slope of the tangent, I was told that Leibniz assumed the smooth curve of a function to be made of an infinite number of infinitesimally small line segments joined together. Extending any one of them gives the tangent to the curve, and the slope of that infinitesimally small segment $= \frac{dy}{dx} =$ the slope of the tangent that we get on extending that segment.

I also learnt that we would need a magnifying glass of "infinite zoom-in power" to see those segments, which may not be possible in reality.


It is incorrect to call it a ratio, but it is very handy to visualize it as one, where $\frac{l}{\delta}$ describes the number of times a certain $l>0$ can be divided by, say, an exceedingly small $\delta>0$ such that there is an exceedingly small remainder. Now if $l$ is also exceedingly small, then the relative difference of the two changes can be measured using this "ratio". The idea of limits suggests that these two values $l$ and $\delta$ are themselves changing, or that $l$ changes with $\delta$, and the sole reason this can't be a ratio is that ratios are defined for constant fractions, whilst $\frac{d}{dx}$ is a changing fraction with no concept of a remainder.