What is the importance of eigenvalues/eigenvectors?

- http://en.wikipedia.org/wiki/Eigenvalues_and_eigenvectors Have you looked at this? It offers a pretty complete answer to the question. – InterestedGuest Feb 23 '11 at 02:34

- Here is a nice explanation: http://hubpages.com/hub/What-the-Heck-are-Eigenvalues-and-Eigenvectors – NebulousReveal Feb 23 '11 at 02:41

- Huh. I am extremely surprised this question hasn't already come up. – Qiaochu Yuan Feb 23 '11 at 03:06

- I realize this isn't my question, but I would *love* to see answers addressing the specific question, "How do you motivate eigenvalues and eigenvectors to a group of students who are only familiar with very basic matrix theory and who don't know anything about vector spaces or linear transformations?" – Jason DeVito Feb 23 '11 at 04:52

- @Jason: Then you should post *that* as a question! – Arturo Magidin Feb 23 '11 at 06:01

- These are the invariants of the important transformations... – DVD Jul 04 '15 at 21:40

- If you are interested in the general application of eigenvalues in the real world, then: https://youtu.be/DwJbHrdj3EU – DRPR May 23 '18 at 05:53

- [This video by 3b1b](https://www.youtube.com/watch?v=PFDu9oVAE-g&list=PLZHQObOWTQDPD3MizzM2xVFitgF8hE_ab&index=14) is by far the best explanation I have seen for its size. – Aditya P Apr 23 '19 at 14:12

11 Answers

Short Answer

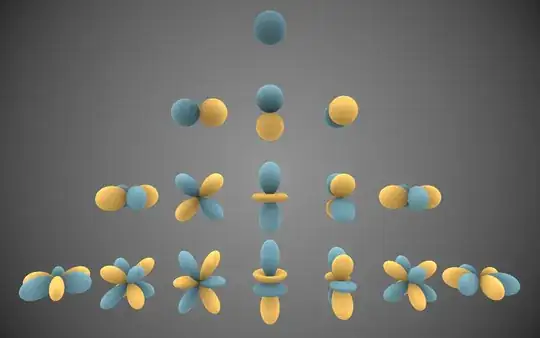

Eigenvectors make understanding linear transformations easy. They are the "axes" (directions) along which a linear transformation acts simply by "stretching/compressing" and/or "flipping"; eigenvalues give you the factors by which this stretching or compression occurs (a negative eigenvalue also means a flip).

The more directions you have along which you understand the behavior of a linear transformation, the easier it is to understand the linear transformation; so you want to have as many linearly independent eigenvectors as possible associated to a single linear transformation.

Slightly Longer Answer

There are a lot of problems that can be modeled with linear transformations, and the eigenvectors give very simple solutions. For example, consider the system of linear differential equations \begin{align*} \frac{dx}{dt} &= ax + by\\ \frac{dy}{dt} &= cx + dy. \end{align*} This kind of system arises when you describe, for example, the growth of population of two species that affect one another. For example, you might have that species $x$ is a predator on species $y$; the more $x$ you have, the fewer $y$ will be around to reproduce; but the fewer $y$ that are around, the less food there is for $x$, so fewer $x$s will reproduce; but then fewer $x$s are around so that takes pressure off $y$, which increases; but then there is more food for $x$, so $x$ increases; and so on and so forth. It also arises in certain physical phenomena, such as a particle in a moving fluid, where the velocity vector depends on the position along the fluid.

Solving this system directly is complicated. But suppose that you could do a change of variable so that instead of working with $x$ and $y$, you could work with $z$ and $w$ (which depend linearly on $x$ and $y$; that is, $z=\alpha x+\beta y$ for some constants $\alpha$ and $\beta$, and $w=\gamma x + \delta y$ for some constants $\gamma$ and $\delta$) and the system transformed into something like \begin{align*} \frac{dz}{dt} &= \kappa z\\ \frac{dw}{dt} &= \lambda w \end{align*} that is, you can "decouple" the system, so that now you are dealing with two independent functions. Then solving this problem becomes rather easy: $z=Ae^{\kappa t}$, and $w=Be^{\lambda t}$. Then you can use the formulas for $z$ and $w$ to find expressions for $x$ and $y$.

Can this be done? Well, it amounts precisely to finding two linearly independent eigenvectors for the matrix $\left(\begin{array}{cc}a & b\\c & d\end{array}\right)$! $z$ and $w$ correspond to the eigenvectors, and $\kappa$ and $\lambda$ to the eigenvalues. By taking an expression that "mixes" $x$ and $y$, and "decoupling it" into one that acts independently on two different functions, the problem becomes a lot easier.

That is the essence of what one hopes to do with the eigenvectors and eigenvalues: "decouple" the ways in which the linear transformation acts into a number of independent actions along separate "directions", that can be dealt with independently. A lot of problems come down to figuring out these "lines of independent action", and understanding them can really help you figure out what the matrix/linear transformation is "really" doing.
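The decoupling can be checked numerically. The following is a minimal sketch, assuming NumPy and hypothetical coefficients $a = d = 1$, $b = c = 2$ (not taken from the answer); it only works when the matrix has two linearly independent eigenvectors. The coordinates of the initial point in the eigenvector basis play the role of $z$ and $w$: each one is multiplied by its own exponential, and the result is mapped back to $x$ and $y$.

```python
import numpy as np

# Hypothetical coefficients for dx/dt = a x + b y, dy/dt = c x + d y
A = np.array([[1.0, 2.0],
              [2.0, 1.0]])

def solve_linear_system(A, x0, t):
    """Solve dX/dt = A X, X(0) = x0, by decoupling along eigenvectors.

    Assumes A has a full set of linearly independent eigenvectors.
    """
    eigvals, S = np.linalg.eig(A)    # columns of S are eigenvectors
    c = np.linalg.solve(S, x0)       # coordinates of x0 in the eigenbasis (the "z, w" values at t = 0)
    z_t = c * np.exp(eigvals * t)    # each decoupled coordinate evolves independently
    return S @ z_t                   # map back to the original (x, y) coordinates

x0 = np.array([1.0, 0.0])
print(solve_linear_system(A, x0, 0.5))
```

For this matrix the eigenvalues are $3$ and $-1$, so the solution starting from $(x, y) = (1, 0)$ is $x = \tfrac12(e^{3t} + e^{-t})$, $y = \tfrac12(e^{3t} - e^{-t})$.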


- +1: This is the clearest explanation I have seen so far about the connection between systems of differential equations and eigenvectors - impressive! – vonjd May 15 '11 at 10:23

- I was a bit puzzled after reading the justification that the simplified system was not dz/dt=kappa*w ; dw/dt=lambda*z. – DWin Jan 09 '15 at 23:44

A short explanation

Consider a matrix $A$, for example one representing a physical transformation (e.g., a rotation). When this matrix is used to transform a given vector $x$, the result is $y = A x$.

Now an interesting question is:

Are there any nonzero vectors $x$ which do not change their direction under this transformation, but whose magnitude may be scaled by a factor $\lambda$?

Such a question is of the form $$A x = \lambda x.$$

Such special vectors $x$ are called eigenvectors, and the factor $\lambda$ by which their magnitude changes is the corresponding eigenvalue.
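A quick numerical check of $Ax = \lambda x$, as a sketch assuming NumPy; the matrix $A$ below is an arbitrary illustrative choice. The second part shows why a plane rotation by 90° is a tricky example: no real direction is left unchanged, so its eigenvalues are complex.

```python
import numpy as np

A = np.array([[2.0, 1.0],
              [1.0, 2.0]])

eigvals, eigvecs = np.linalg.eig(A)
for lam, v in zip(eigvals, eigvecs.T):   # eigenvectors are the columns of eigvecs
    Av = A @ v
    # A v points along v: it is just v scaled by lambda
    print(f"lambda = {lam:.1f}, v = {v}, Av - lambda*v = {Av - lam * v}")

# A 90-degree rotation in the plane leaves no real direction unchanged,
# so its eigenvalues are complex (+i and -i).
R = np.array([[0.0, -1.0],
              [1.0, 0.0]])
print(np.linalg.eigvals(R))
```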


- @kaka: and perpendicular ones, for which the projection is null, I believe. I would just like to say that this short explanation was great! I find this good simple example very precious as a motivation for eigenvalues, matrices, etc. Thank you! – Pedro Lopes Jan 25 '16 at 12:13

- A useless regurgitation of the eigenvector/eigenvalue relation is helpful how exactly? Why bother? Jeezus. – Rick O'Shea Jan 07 '18 at 19:08


The behaviour of a linear transformation can be obscured by the choice of basis. For some transformations, this behaviour can be made clear by choosing a basis of eigenvectors: the linear transformation is then a (non-uniform in general) scaling along the directions of the eigenvectors. The eigenvalues are the scale factors.
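A minimal sketch of this, assuming NumPy and an arbitrary example matrix: writing the map in the basis given by its eigenvectors (the columns of `P`) turns it into a diagonal matrix whose entries are the scale factors. This requires the matrix to have a full set of real eigenvectors, which a plane rotation by 90 degrees or a Jordan block does not.

```python
import numpy as np

# A hypothetical matrix whose action is hard to read off from its entries
A = np.array([[4.0, 1.0],
              [2.0, 3.0]])

eigvals, P = np.linalg.eig(A)           # columns of P: eigenvectors, used as the new basis
# Matrix of the same linear map, written in the eigenvector basis
A_in_eigenbasis = np.linalg.inv(P) @ A @ P
print(np.round(A_in_eigenbasis, 10))    # diagonal, with the eigenvalues (scale factors) on the diagonal
```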


- For someone just beginning linear algebra, this doesn't really make sense or motivate eigenvectors. For example, it could make the student naively ask, "why does the basis matter at all? a rotation by 90 degrees is the same rotation no matter what basis I use to look at it; it's never going to become a scaling in any basis..." and then you have a lot more explanation to do. It would be nice to be able to address this without assuming they already know a lot of linear algebra. – user541686 Apr 25 '15 at 10:02

- By throwing in "non-uniform", this is the only answer here that makes me appreciate Jordan blocks, since the existence of a Jordan block signals that some transformations act on certain combinations of axes that are inherently non-decomposable. – Jun 13 '20 at 05:26

I think if you want a better answer, you need to tell us more precisely what you have in mind: are you interested in theoretical aspects of eigenvalues, or do you have a specific application in mind? Matrices by themselves are just arrays of numbers, which take on meaning once you set up a context. Without the context, it seems difficult to give you a good answer. If you use matrices to describe adjacency relations, then eigenvalues/vectors may mean one thing; if you use them to represent linear maps, something else, etc.

One possible application: in some cases, you may be able to diagonalize your matrix $M$ using its eigenvalues and eigenvectors, which gives you a nice expression for $M^k$. Specifically, you may be able to decompose your matrix into a product $SDS^{-1}$, where $D$ is diagonal with the eigenvalues as its entries, and $S$ is the matrix whose columns are the associated eigenvectors. Then $M^k = SD^kS^{-1}$, and $D^k$ is obtained simply by raising each diagonal entry to the $k$-th power. I hope it is not a problem to post this as a comment. I got a couple of Courics here last time for posting a comment in the answer site.
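The formula $M^k = SD^kS^{-1}$ in a few lines, as a sketch assuming NumPy and a diagonalizable $M$; the Fibonacci matrix is just an illustrative choice. In practice `np.linalg.matrix_power` is the simpler tool for integer powers; the point here is that the eigendecomposition reduces a matrix power to powers of the eigenvalues.

```python
import numpy as np

def matrix_power_via_eig(M, k):
    """Compute M^k as S D^k S^{-1}; assumes M is diagonalizable."""
    eigvals, S = np.linalg.eig(M)
    Dk = np.diag(eigvals ** k)    # a power of a diagonal matrix = powers of its diagonal entries
    return S @ Dk @ np.linalg.inv(S)

# Example: the Fibonacci matrix, whose powers contain Fibonacci numbers
M = np.array([[1.0, 1.0],
              [1.0, 0.0]])
print(np.round(matrix_power_via_eig(M, 10)))  # entries 89, 55, 55, 34
```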

Mr. Arturo: Interesting approach! This seems to connect with the theory of characteristic curves in PDEs (who knows if it can be generalized to dimensions higher than 1), which are curves along which a PDE becomes an ODE, i.e., as you so brilliantly said, curves along which the PDE decouples.


- Yes, the method of characteristics can be generalized to dimensions higher than 1. In general, the method of characteristics can be applied to arbitrary first-order quasilinear scalar PDEs defined on any smooth manifold. However, it will not necessarily work for systems of PDEs or higher-order PDEs. – Willie Wong Feb 23 '11 at 11:03

- (It can in fact also be extended to fully nonlinear first-order scalar PDEs, but changing the point of view from vector fields to Monge cones is a bit hard to visualize at first.) – Willie Wong Feb 23 '11 at 11:06

When you apply transformations to systems or objects represented by matrices, and you need some characteristics of these matrices, you often have to calculate their eigenvectors and eigenvalues.

"Having an eigenvalue is an accidental property of a real matrix (since it may fail to have an eigenvalue), but every complex matrix has an eigenvalue."(Wikipedia)

Eigenvalues characterize important properties of linear transformations, such as whether a system of linear equations has a unique solution or not: a square system $Ax = b$ has a unique solution for every $b$ exactly when $0$ is not an eigenvalue of $A$. In many applications, eigenvalues also describe physical properties of a mathematical model.
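A sketch of the unique-solution criterion, assuming NumPy; the tolerance `tol` is a hypothetical parameter, needed because the computed eigenvalues of a singular matrix are only approximately zero in floating point. For serious numerical work the condition number is a more reliable indicator than a hard threshold.

```python
import numpy as np

def has_unique_solution(A, tol=1e-10):
    """A square system A x = b has a unique solution iff 0 is not an eigenvalue of A."""
    eigvals = np.linalg.eigvals(A)
    return bool(np.all(np.abs(eigvals) > tol))

print(has_unique_solution(np.array([[2.0, 1.0], [1.0, 3.0]])))  # True
print(has_unique_solution(np.array([[1.0, 2.0], [2.0, 4.0]])))  # False: second row is twice the first
```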

Some important applications:

- Principal Components Analysis (PCA) in object/image recognition;
- Physics: stability analysis, the physics of rotating bodies;
- Market risk analysis: determining whether a matrix is positive definite;
- PageRank from Google.
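For the market-risk item, the check rests on a standard fact: a symmetric matrix is positive definite exactly when all of its eigenvalues are positive. A minimal sketch assuming NumPy, with made-up 2×2 matrices:

```python
import numpy as np

def is_positive_definite(C):
    """A symmetric matrix is positive definite iff all its eigenvalues are > 0."""
    if not np.allclose(C, C.T):
        raise ValueError("expected a symmetric matrix")
    return bool(np.all(np.linalg.eigvalsh(C) > 0))  # eigvalsh: eigenvalues of a symmetric matrix

# Hypothetical 2-asset correlation matrices
good = np.array([[1.0, 0.3], [0.3, 1.0]])
bad = np.array([[1.0, 1.2], [1.2, 1.0]])   # a "correlation" above 1 is inconsistent
print(is_positive_definite(good), is_positive_definite(bad))  # True False
```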


- Thanks for your answer, but aren't eigenvectors/eigenvalues calculated by iteratively multiplying the matrix by a random vector? https://en.wikipedia.org/wiki/Power_iteration In other words, isn't it just as fast to start with a random distribution and look at where all the traffic ends up after several iterations as it is to use a sophisticated algorithm using eigenvalues? – Hao S Mar 20 '21 at 20:55

In data analysis, the eigenvectors of a covariance (or correlation) matrix are usually calculated.

Eigenvectors are the set of basis functions that are the most efficient set to describe data variability. They also define the coordinate system in which the covariance matrix becomes diagonal, so the new variables referenced to this coordinate system are uncorrelated. Each eigenvalue measures the data variance explained by the corresponding new coordinate axis.

They are used to reduce the dimension of large data sets, by selecting only the few modes with significant eigenvalues, and to find new variables that are uncorrelated; this is very helpful for least-squares regression of badly conditioned systems. It should be noted that the link between these statistical modes and the true dynamical modes of a system is not always straightforward because of sampling problems.
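The steps above (covariance matrix, eigenvectors, uncorrelated new variables, dimension reduction) can be sketched as follows, assuming NumPy and synthetic data generated purely for illustration:

```python
import numpy as np

rng = np.random.default_rng(0)
# Synthetic, hypothetical data: 500 samples of 2 strongly correlated variables
x = rng.normal(size=500)
data = np.column_stack([x, 0.8 * x + 0.2 * rng.normal(size=500)])

centered = data - data.mean(axis=0)
cov = np.cov(centered, rowvar=False)        # 2x2 covariance matrix
eigvals, eigvecs = np.linalg.eigh(cov)      # eigh: eigenvalues in ascending order for symmetric matrices
order = np.argsort(eigvals)[::-1]           # sort modes by explained variance, largest first
eigvals, eigvecs = eigvals[order], eigvecs[:, order]

scores = centered @ eigvecs                 # data expressed in the eigenvector coordinate system
explained = eigvals / eigvals.sum()
print("fraction of variance per mode:", np.round(explained, 3))
print("covariance of new variables:\n", np.round(np.cov(scores, rowvar=False), 6))

# Dimension reduction: keep only the leading mode
reduced = scores[:, :1]
```

The covariance matrix of `scores` is diagonal (the new variables are uncorrelated), and its diagonal holds the eigenvalues.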


Let's go back to the historical background to get the motivation!

Consider a linear transformation $T: V \to V$.

Given a basis of $V$, $T$ is characterized beautifully by a matrix $A$, which tells everything about $T$.

The bad part about $A$ is that "$A$ changes with the change of basis of $V$".

Why is that bad?

Because the same linear transformation, under two different choices of basis, is given by two distinct matrices. Believe me! You cannot relate the two matrices just by looking at their entries. Really not interesting!

Intuitively, there exists some strong relation between two such matrices.

Our aim is to capture THE THING they have in common (mathematically).

Now, equal eigenvalues are a necessary condition for this, but not a sufficient one!

Let me make my statement clear.

"The invariance of a subspace of $V$ under a linear transformation of $V$" is such a property.

That is, if $A$ and $B$ represent the same $T$, then they must have all their eigenvalues equal. But the converse is not true! Hence the condition is not sufficient, but it is NECESSARY!

And the relation is "$A$ is similar to $B$", i.e. $A = PBP^{-1}$ where $P$ is a non-singular matrix.
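A numerical illustration of both directions, as a sketch assuming NumPy; the matrices are arbitrary examples. Similar matrices share eigenvalues, but the identity $I$ and the Jordan block $J$ share the eigenvalues $1, 1$ without being similar, because $PIP^{-1} = I$ for every invertible $P$.

```python
import numpy as np

rng = np.random.default_rng(1)
B = np.array([[2.0, 1.0],
              [0.0, 3.0]])
P = rng.normal(size=(2, 2))        # a random (almost surely non-singular) change of basis
A = P @ B @ np.linalg.inv(P)       # A and B represent the same linear map in different bases

print(np.sort(np.linalg.eigvals(A).real), np.sort(np.linalg.eigvals(B).real))  # both ~[2, 3]

# The converse fails: these two matrices have the same eigenvalues (1, 1) ...
I = np.eye(2)
J = np.array([[1.0, 1.0],
              [0.0, 1.0]])
# ... but they are not similar: P I P^{-1} = I for every invertible P, and J != I.
print(np.linalg.eigvals(I), np.linalg.eigvals(J))
```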


Eigenvalues and eigenvectors are central to the definition of measurement in quantum mechanics

Measurements are what you do during experiments, so this is obviously of central importance to physics.

The state of a system is a vector in Hilbert space, an infinite-dimensional space of square-integrable functions.

Then, each measurable quantity (observable) is represented by a self-adjoint operator, and after a measurement of that quantity is done:

- the state collapses to an eigenvector of the self-adjoint operator (this is the formal description of the observer effect)

- the result of the measurement is the corresponding eigenvalue of the self-adjoint operator

Self-adjoint operators have the following two key properties that allow them to make sense as measurements, as a consequence of infinite-dimensional generalizations of the spectral theorem:

- their eigenvectors form an orthonormal basis of the Hilbert space; therefore, if the state has a nonzero component along some eigenvector directions, it has a nonzero probability of collapsing onto each of those directions

- the eigenvalues are real: our instruments tend to give real numbers as results :-)
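A finite-dimensional toy version of these two properties, as a sketch assuming NumPy: a made-up 3×3 Hermitian matrix stands in for the observable, and the outcome probabilities are computed with the standard Born rule, $p_i = |\langle v_i | \psi \rangle|^2$.

```python
import numpy as np

# A hypothetical 3-level "observable": any Hermitian (self-adjoint) matrix will do
H = np.array([[1.0, 1j, 0.0],
              [-1j, 2.0, 0.5],
              [0.0, 0.5, 3.0]])
assert np.allclose(H, H.conj().T)     # self-adjoint

eigvals, eigvecs = np.linalg.eigh(H)  # eigh: real eigenvalues, orthonormal eigenvectors (columns)
print(eigvals)                        # possible measurement results: all real
print(np.allclose(eigvecs.conj().T @ eigvecs, np.eye(3)))  # orthonormal basis

psi = np.array([1.0, 1.0, 1.0]) / np.sqrt(3)  # a normalized state
amplitudes = eigvecs.conj().T @ psi           # components of psi along each eigenvector
probabilities = np.abs(amplitudes) ** 2       # Born rule: probability of each outcome
print(probabilities, probabilities.sum())     # the probabilities sum to 1
```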

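As a more concrete and super important example, we can take the explicit solution of the Schrodinger equation for the hydrogen atom. In that case, the eigenvectors (eigenfunctions) of the energy operator have an angular part given by spherical harmonics, and the eigenvalues are the discrete energy levels $E_n \approx -13.6\ \text{eV}/n^2$, $n = 1, 2, 3, \dots$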

Therefore, if we were to measure the energy of the electron, we are certain that:

- the measurement would give one of the energy eigenvalues

  - the energy difference between two energy levels matches experimental observations of the hydrogen spectral series, and is one of the great triumphs of the Schrodinger equation

- the wave function would collapse to one of those eigenfunctions after the measurement, that is, to one of the eigenvectors of the energy operator

Bibliography: https://en.wikipedia.org/wiki/Measurement_in_quantum_mechanics

The time-independent Schrodinger equation is an eigenvalue equation

For a time-independent potential $V(\mathbf{r})$, the general Schrodinger equation can be simplified by separation of variables to the time-independent Schrodinger equation; general solutions are then superpositions of these separated solutions:

$$ \left[ \frac{-\hbar^2}{2m}\nabla^2 + V(\mathbf{r}) \right] \Psi(\mathbf{r}) = E \Psi(\mathbf{r}) $$

The left side of that equation is a linear operator (an infinite-dimensional analogue of a matrix, acting on vectors of a Hilbert space) applied to the vector $\Psi$ (a function, i.e. a vector of a Hilbert space). And since $E$ is a constant (the energy), this is just an eigenvalue equation.
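To see the eigenvalue equation concretely, one can discretize the operator on a grid, which turns it into an ordinary (large) symmetric matrix. A minimal sketch assuming NumPy, units $\hbar = m = 1$, and a particle in a box of width 1 ($V = 0$ inside, $\Psi = 0$ at the walls) rather than the hydrogen atom; the lowest matrix eigenvalues approximate the exact energies $E_n = n^2\pi^2/2$.

```python
import numpy as np

def particle_in_box_levels(n_points=500, n_levels=3):
    """Lowest eigenvalues of -1/2 d^2/dx^2 on (0, 1) with psi(0) = psi(1) = 0.

    Assumes units hbar = m = 1 and V = 0 inside the box (choices made for this sketch).
    """
    h = 1.0 / (n_points + 1)                  # grid spacing; interior points only
    main = np.full(n_points, 1.0 / h**2)      # -1/2 * (-2/h^2) on the diagonal
    off = np.full(n_points - 1, -0.5 / h**2)  # -1/2 * (1/h^2) on the off-diagonals
    H = np.diag(main) + np.diag(off, 1) + np.diag(off, -1)
    energies = np.linalg.eigvalsh(H)          # the Hamiltonian matrix is symmetric
    return energies[:n_levels]

exact = [(n * np.pi) ** 2 / 2 for n in (1, 2, 3)]  # analytic E_n = n^2 pi^2 / 2
print(particle_in_box_levels(), exact)
```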

Have a look at "Real world application of Fourier series" to get a feeling for how separation of variables works for a simpler equation like the heat equation.

Heuristic argument for why Google PageRank comes down to a diagonalization problem

PageRank was mentioned at https://math.stackexchange.com/a/263154/53203, but I wanted to add one cute hand-wavy intuition.

PageRank is designed to have the following properties:

- the more incoming links a page has, the greater its score

- the greater its score, the more the page boosts the rank of other pages

The difficulty then becomes that pages can affect each other circularly, for example suppose:

- A links to B

- B links to C

- C links to A

Therefore, in such a case

- the score of B depends on the score of A

- which in turn depends on the score of C

- which in turn depends on the score of B

- so the score of B depends on itself!

Therefore, one can feel that theoretically, an "iterative approach" cannot work: we need to somehow solve the entire system in one go.

And one may hope that, once we assign the correct importance to all nodes, and if the transition probabilities are linear, an equilibrium may be reached:

Transition matrix * Importance vector = 1 * Importance vector

which is an eigenvalue equation with eigenvalue 1.
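The equilibrium equation can be solved directly for the three-page cycle above. This is a sketch assuming NumPy; `pagerank_eigenvector` is a hypothetical helper, and it leaves out the damping factor and the handling of pages without outgoing links that a real PageRank implementation needs. The second call adds a hypothetical extra link from A to C to show a non-uniform result.

```python
import numpy as np

def pagerank_eigenvector(links, n):
    """Importance vector r with M r = 1 * r, for a hypothetical tiny web graph.

    links: list of (source, target) pairs; every page is assumed to have at least
    one outgoing link (no damping factor, unlike the production algorithm).
    """
    M = np.zeros((n, n))
    for src, dst in links:
        M[dst, src] = 1.0
    M /= M.sum(axis=0)                    # column-stochastic: each page splits its vote evenly
    eigvals, eigvecs = np.linalg.eig(M)
    k = np.argmin(np.abs(eigvals - 1.0))  # pick the eigenvalue closest to 1
    r = np.real(eigvecs[:, k])
    return r / r.sum()                    # normalize so the scores sum to 1

A, B, C = 0, 1, 2
print(pagerank_eigenvector([(A, B), (B, C), (C, A)], 3))          # the cycle: all scores equal
print(pagerank_eigenvector([(A, B), (B, C), (C, A), (A, C)], 3))  # extra link A -> C
```

For the pure cycle every page gets $1/3$; with the extra link the scores become $(0.4, 0.2, 0.4)$, since A now splits its vote between B and C.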

Markov chain convergence

https://en.wikipedia.org/wiki/Markov_chain

This is closely related to the above Google PageRank use-case.

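The equilibrium (the stationary distribution) is again the eigenvector with eigenvalue 1, and the convergence speed is dominated by the ratio of the two largest eigenvalue magnitudes; since the largest is 1, this is the magnitude of the second-largest eigenvalue.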
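A two-state example of this, as a sketch assuming NumPy and a made-up transition matrix with eigenvalues $1$ and $0.4$: the distance to the stationary distribution shrinks by exactly the factor $0.4$ at each step.

```python
import numpy as np

# A hypothetical two-state chain; row i holds the transition probabilities out of state i
P = np.array([[0.9, 0.1],
              [0.5, 0.5]])

eigvals, left_vecs = np.linalg.eig(P.T)  # left eigenvectors of P = eigenvectors of P^T
k = np.argmin(np.abs(eigvals - 1.0))
stationary = np.real(left_vecs[:, k])
stationary /= stationary.sum()           # equilibrium distribution, here (5/6, 1/6)

second = np.sort(np.abs(eigvals))[-2]    # second-largest eigenvalue magnitude, here 0.4

dist = np.array([0.0, 1.0])              # start in state 1
errors = []
for step in range(1, 6):
    dist = dist @ P
    errors.append(np.abs(dist - stationary).sum())
ratios = np.array(errors[1:]) / np.array(errors[:-1])
print(np.round(errors, 5))
print(np.round(ratios, 5))               # each ratio equals the second eigenvalue, 0.4
```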

See also: https://www.stat.auckland.ac.nz/~fewster/325/notes/ch9.pdf


I would like to direct you to an answer that I posted here: Importance of eigenvalues

I feel it is a nice example to motivate students who ask this question, in fact I wish it were asked more often. Personally, I hold such students in very high regard.

- I would like to point out the useless nature of your "natural frequency" and Tacoma Narrows Bridge regurgitation. I'm going to venture a guess you have no grasp of this topic. – Rick O'Shea Jan 07 '18 at 19:25

This made it clear for me https://www.youtube.com/watch?v=PFDu9oVAE-g

There are a lot of other linear algebra videos from 3Blue1Brown as well.


An eigenvector $v$ of a matrix $A$ is a direction unchanged by the linear transformation: $Av=\lambda v$. An eigenvalue of a matrix is unchanged by a change of coordinates: if $Av = \lambda v$ and $v = Bu$ for an invertible $B$, then $A(Bu) = \lambda (Bu)$, i.e. $(B^{-1}AB)u = \lambda u$, so $\lambda$ is also an eigenvalue of $B^{-1}AB$. These are important invariants of linear transformations.
